Introduction

“English-to-SQL” is a hot topic right now—and for good reason. It’s the dream of every data analyst, data engineer, and data-savvy team: imagine asking a question in plain English and having an LLM not only understand it, but also run the exact SQL query needed to get the answer from your database or data lake.

There are many ways to implement English-to-SQL, and each approach comes with different tradeoffs in complexity, accuracy, and contextual awareness. What we’re showcasing here is just one practical and powerful path—focused on giving the LLM rich, structured context using dbt and LangChain.

Our dbt-to-English project laid the foundation by giving LLMs access to your dbt metadata, real SQL object names, and custom prompts. Now, we’ve taken it further: by integrating LangChain’s QuerySQLDatabaseTool, your LLM-powered assistant can not only understand your data environment, but actually connect to your database and run live SQL queries based on natural language inputs.

If you haven’t read our previous blog post about dbt-to-English, we recommend starting there—it sets the stage for what’s next.

Let’s explore how this new feature works in practice.

Getting Started: Connecting to the Database and Generating Fictional Sample Data

Let’s begin with a simple example to understand how the system works.

1. Configure Your Environment

Update your private.env file by adding a new key from the private.env-template file, for example:

DATABASE_URI=...You can either use your own database or connect to the provided local one:

DATABASE_URI=postgresql://postgres:postgres@postgres:5432/postgres2. Launch the Environment

Run the dbt-to-English environment (GitHub repo) using Docker:

docker compose up --build3. Run dbt to generate source tables and transformation models.

cd DbtExampleProject

dbt build4. Enable Database Connection in UI

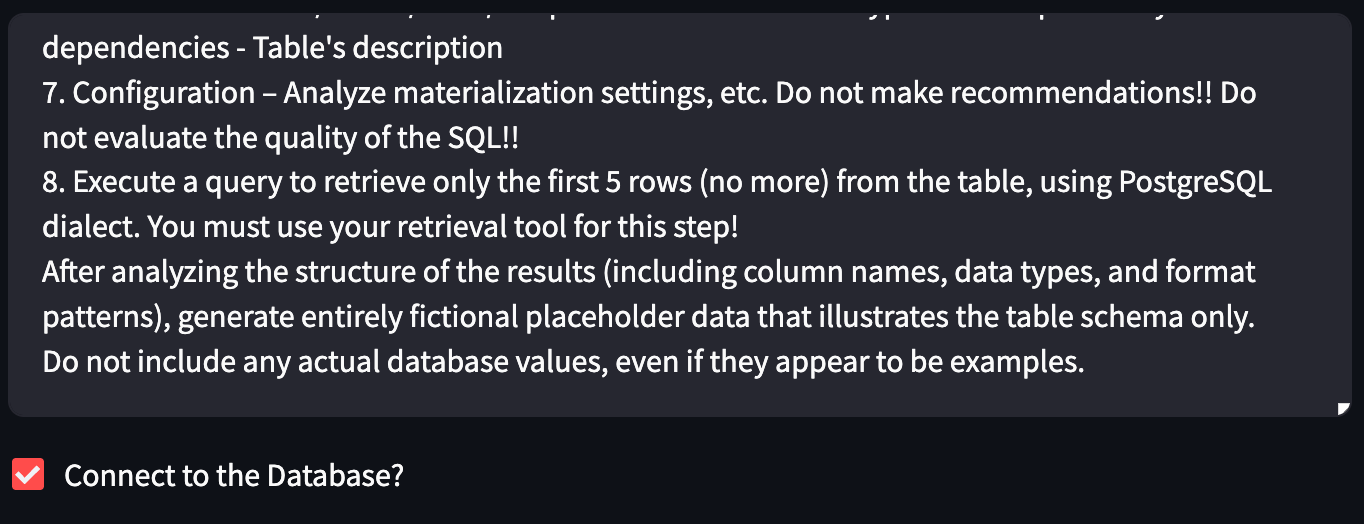

In the app interface, select the catalog and manifest files. Then proceed to the System Prompt section, where a new checkbox labeled "Connect to the Database?" appears below the prompt text. Check this box.

Now add the following instruction to your prompt:

Execute a query to retrieve only the first 5 rows (no more) from the table, using PostgreSQL dialect. You must use your retrieval tool for this step!

After analyzing the structure of the results (including column names, data types, and format patterns), generate entirely fictional placeholder data that illustrates the table schema only.

Do not include any actual database values, even if they appear to be examples.

5. Run the Script

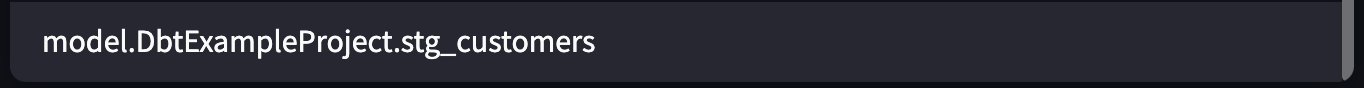

Let’s use the stg_customers model for this test. The system connects to the database via the provided DATABASE_URI, runs a query to fetch the first 5 rows, analyzes the schema, and produces fake—but structurally accurate—data.

6. Verify Query Execution

Inspect the PostgreSQL logs to confirm that the query was executed. The logs show that the tool did connect and access the table, which confirms the integration is functioning correctly.

7. Compare with Actual Data

To verify the accuracy of the AI's schema understanding, run the same query manually:

SELECT * FROM dbt.stg_customers sc LIMIT 5;As expected, the tool generated entirely fictional data that mirrors the structure of the actual records. This proves that the placeholder data is schema-faithful and safe for demonstration or documentation purposes. Data analysts, product managers, or other non-technical users can now explore data structures confidently, without any risk of exposing real production data.

Advanced Validation Use Case

Now, let’s explore a more advanced and practical use case—identifying outdated dbt descriptions.

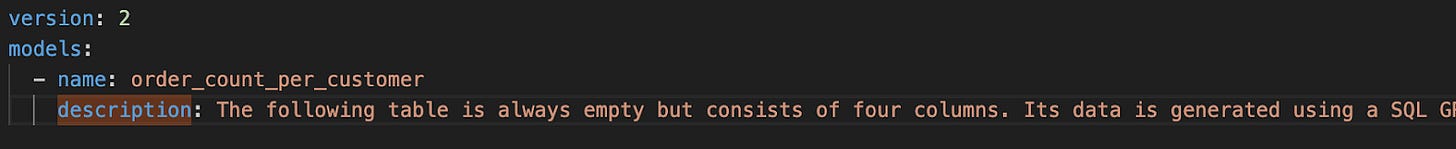

1. Add a Description with Known Issues

We’ll begin by editing the schema.yml file to add a description for the order_count_per_customer model. Intentionally include two incorrect statements and one correct one. For example:

“The following table is always empty but consists of four columns. Its data is generated using a SQL GROUP BY clause.”

2. Refresh Metadata

Restart the docker compose process to ensure the catalog and manifest files are regenerated with the updated model description:

docker compose downdocker compose up --buildThen, reselect the updated catalog and manifest files in the interface.

3. Update the Prompt for Comparison

Modify the prompt to instruct the LLM to perform a structured validation:

Compare the actual behavior of the current model with its dbt description (documentation).

If needed, use the QuerySQLDatabaseTool with the PostgreSQL dialect to validate the implementation.

Return your findings in a table with two columns:

Description Item – a specific claim or element from the dbt model's description

Status – either Correct if the behavior matches the description, or Incorrect if it does not

The table must include only the items that can be explicitly verified as correct or incorrect.

Avoid listing any assumptions, inferred logic, or undocumented behaviors.

Make sure to check the "Connect to the Database" checkbox before running the model.

4. Run the LLM

Execute the process.

As observed, the output table is highly accurate. The LLM correctly interpreted the dbt description, connected to the database, and validated each point:

It verified that the table is not empty.

It confirmed the presence of four columns.

It validated the use of a SQL GROUP BY clause.

This confirms that the LLM can reliably detect outdated or incorrect documentation by cross-referencing dbt metadata with actual model behavior—providing a valuable tool for maintaining accurate, trustworthy data documentation.

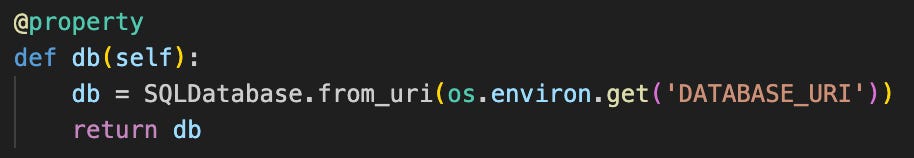

How It Works Under the Hood

Let’s break down how this functionality is implemented from a technical perspective.

The core logic resides in the following file within our repository:

fastapi/server/tr_dbt_to_english.py

To enable database interaction, we utilize the QuerySQLDatabaseTool from the langchain_community package.

This tool is initialized with a SQLDatabase object, which can be constructed using the SQLDatabase.from_uri() method.

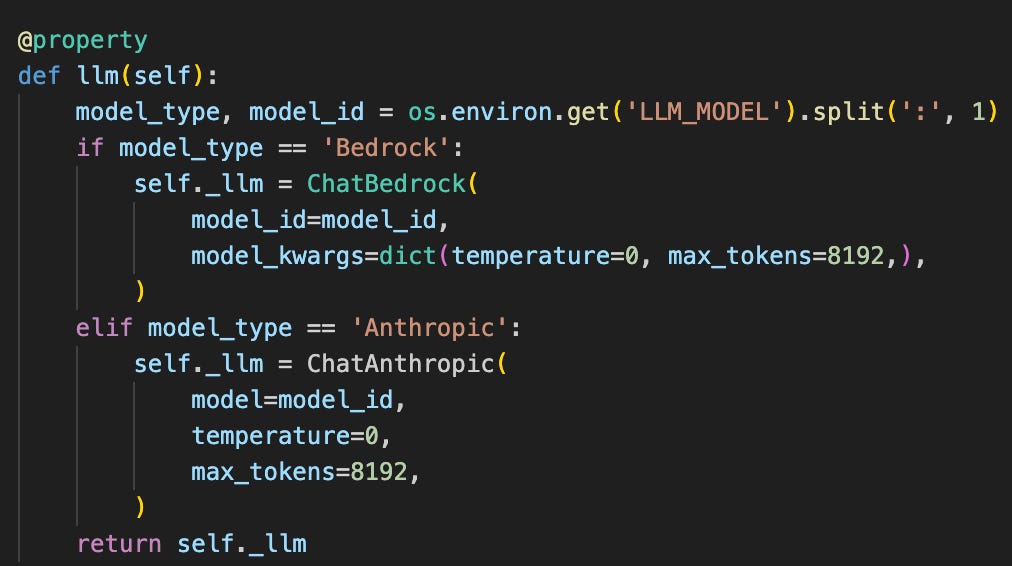

In addition to setting up the database connection, we define the LLM Chat instance—this serves as the "brain" behind the operation.

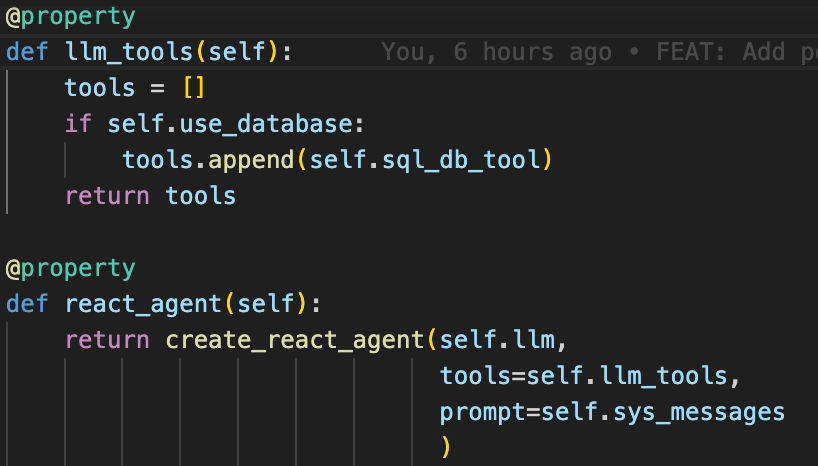

Once the QuerySQLDatabaseTool is ready, we configure a ReAct agent (LangChain tutorial). This agent integrates the LLM and the tools it can use (such as querying the database).

Finally, we use self.react_agent.stream to stream the model’s response, allowing it to dynamically interact with the database and return results in real time.

Conclusion: From Metadata to Meaningful Queries

What began as a way to help LLMs understand dbt metadata has now evolved into a practical, end-to-end system for transforming natural language into real, executable SQL—accurately and safely. By combining the structure of dbt, the flexibility of LangChain, and the power of live database connectivity, we've created a workflow that not only interprets intent but validates it against real data sources. Whether you're verifying outdated documentation, previewing schema with fictional data, or simply trying to bridge the gap between humans and databases, this integration shows what's possible when metadata, modeling, and AI work together. And best of all—it’s reproducible, extensible, and ready for your data team to explore.