Introduction

A few weeks ago, we introduced Airflow MCP from Hipposys. Since then, we've fine-tuned its capabilities to better align with real-world user needs. In this post, we’ll walk you through three major updates: Safe Mode for secure operation, enhanced controls to manage DAG state, and a new way to predict upcoming DAG runs using our Airflow Schedule Insights plugin.

Run with Confidence: Safe Mode

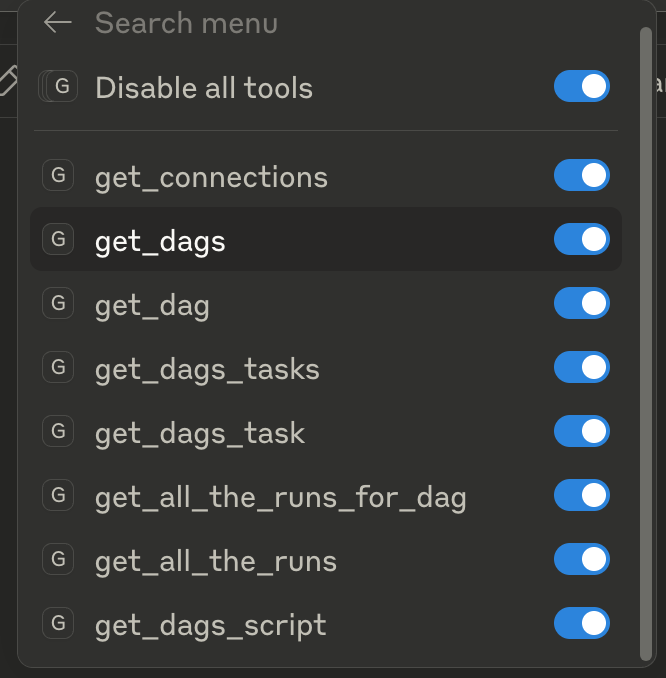

We realized that many users—especially those working with production Airflow instances—need to be certain that MCP won’t inadvertently alter DAG states. Even if your Airflow user has permission to run, pause, or delete DAGs, you may want to use MCP strictly for read-only access. That’s why Safe Mode is now the default behavior.

In Safe Mode, only informational (GET) functions are available, so you can inspect your Airflow environment without risk. To enable write capabilities (e.g. triggering or modifying DAGs), you explicitly opt in by setting the POST_MODE environment variable to "true".
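To make the opt-in behavior concrete, here is a minimal Python sketch of how a server could gate its tools on POST_MODE. It is an illustration only, not the actual Airflow MCP implementation: the tool registry, the HTTP methods assigned to each tool, and every tool name except change_dags_pause_status are assumptions made up for this example.

```python
import os

# Hypothetical tool registry: tool name -> HTTP method the tool would use.
# Only change_dags_pause_status is a name taken from this post; the rest
# (and all the methods) are illustrative assumptions.
TOOLS = {
    "list_dags": "GET",
    "get_dag_runs": "GET",
    "trigger_dag": "POST",
    "change_dags_pause_status": "PATCH",
}


def available_tools(env=None):
    """Return the tool names exposed for the given environment mapping.

    Read-only (GET) tools are always exposed. Everything else is exposed
    only when POST_MODE is set to "true" (case-insensitive).
    """
    env = os.environ if env is None else env
    post_mode = env.get("POST_MODE", "").strip().lower() == "true"
    return sorted(
        name for name, method in TOOLS.items()
        if post_mode or method == "GET"
    )


# Safe Mode (the default): only read-only tools are listed.
safe = available_tools({})
# Opt-in: state-changing tools become available as well.
full = available_tools({"POST_MODE": "true"})
```

The point of the sketch is that the default path (no variable set) is the restrictive one, so forgetting to configure anything can never enable write operations.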

To do this, add the following to your claude_desktop_config.json:

    {
      "mcpServers": {
        "airflow_mcp": {
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "airflow_api_url",
            "-e",
            "airflow_username",
            "-e",
            "airflow_password",
            "-e",
            "POST_MODE",
            "hipposysai/airflow-mcp:latest"
          ],
          "command": "docker",
          "env": {
            "airflow_api_url": "http://host.docker.internal:8088/api/v1",
            "airflow_username": "airflow",
            "airflow_password": "airflow",
            "POST_MODE": "true"
          }
        }
      }
    }

Once enabled, you’ll have access to all functions, including state-changing operations.

Until then, rest assured: by default, MCP operates in a safe, read-only mode, so you can explore and monitor without risking production disruptions or unscheduled runs.
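One easy mistake in this configuration is listing a variable under "env" without also forwarding it to the container with "-e" in "args" (or the reverse). Docker's `-e NAME` form, with no value, forwards NAME from the environment of the process that launches docker, so both halves are needed. The small Python check below is a hypothetical helper, not part of Airflow MCP, that flags such mismatches in a server entry shaped like the one above.

```python
def env_names_passed(args):
    """Names that follow a bare "-e" flag in a docker argument list."""
    return [args[i + 1] for i in range(len(args) - 1) if args[i] == "-e"]


def config_mismatches(server):
    """Compare "-e NAME" flags in args with the keys of the env mapping.

    Returns (forwarded_but_unset, set_but_not_forwarded) as sorted lists.
    """
    passed = set(env_names_passed(server.get("args", [])))
    declared = set(server.get("env", {}))
    return sorted(passed - declared), sorted(declared - passed)


# The airflow_mcp entry from the configuration shown above.
server = {
    "command": "docker",
    "args": [
        "run", "-i", "--rm",
        "-e", "airflow_api_url",
        "-e", "airflow_username",
        "-e", "airflow_password",
        "-e", "POST_MODE",
        "hipposysai/airflow-mcp:latest",
    ],
    "env": {
        "airflow_api_url": "http://host.docker.internal:8088/api/v1",
        "airflow_username": "airflow",
        "airflow_password": "airflow",
        "POST_MODE": "true",
    },
}

unset, not_forwarded = config_mismatches(server)
```

For the configuration in this post both lists come back empty. If POST_MODE were set under "env" but its "-e" flag were missing, it would show up in the second list and the container would quietly stay in Safe Mode.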

Pause DAGs Before the Storm

We've all been there: an unexpected (or even expected) outage hits, and you need to urgently pause all Airflow DAGs. So you go through them one by one… but wait—some DAGs were already paused. Should you manually note them down just to restore their state afterward? That’s tedious—and error-prone.

Not anymore.

Thanks to the new change_dags_pause_status method, this process is now streamlined and reliable.

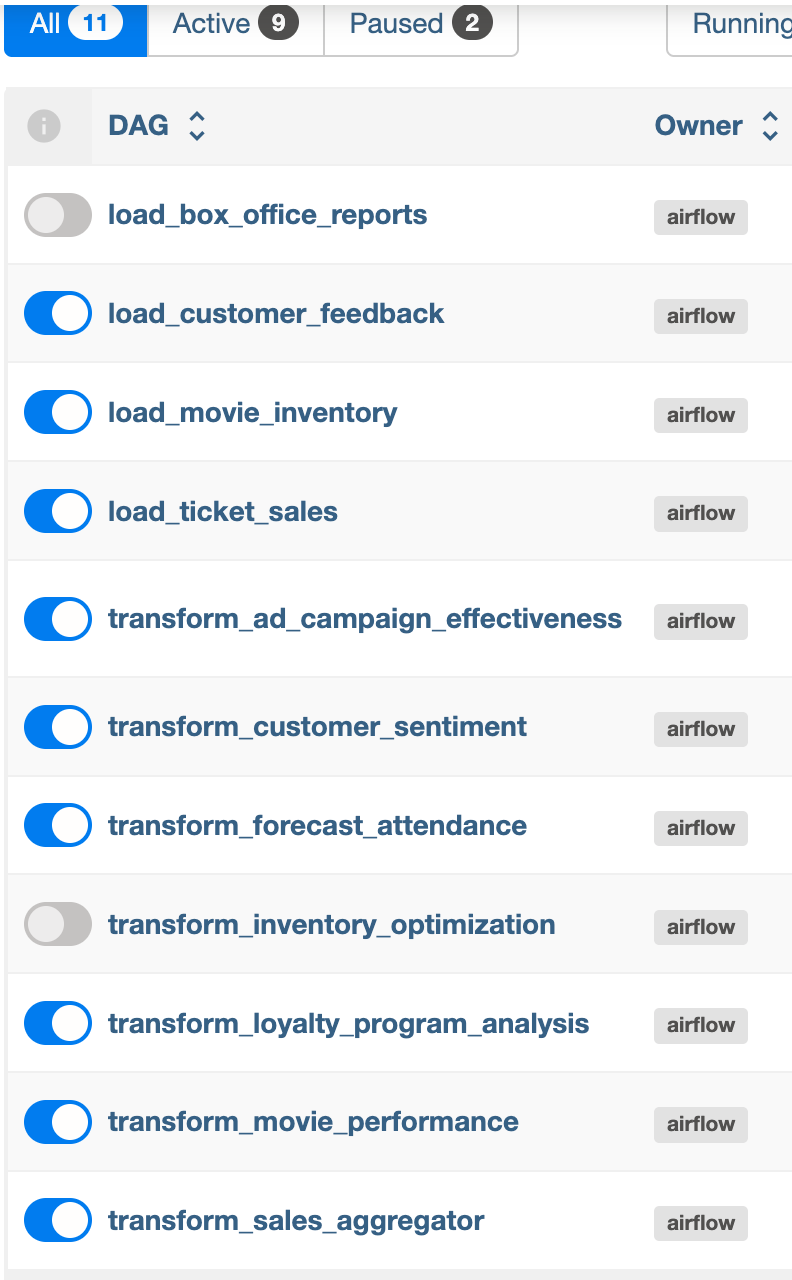

Note: The Airflow cluster I'm working with is deployed from our GitHub repo: hipposys-ltd/airflow-mcp.

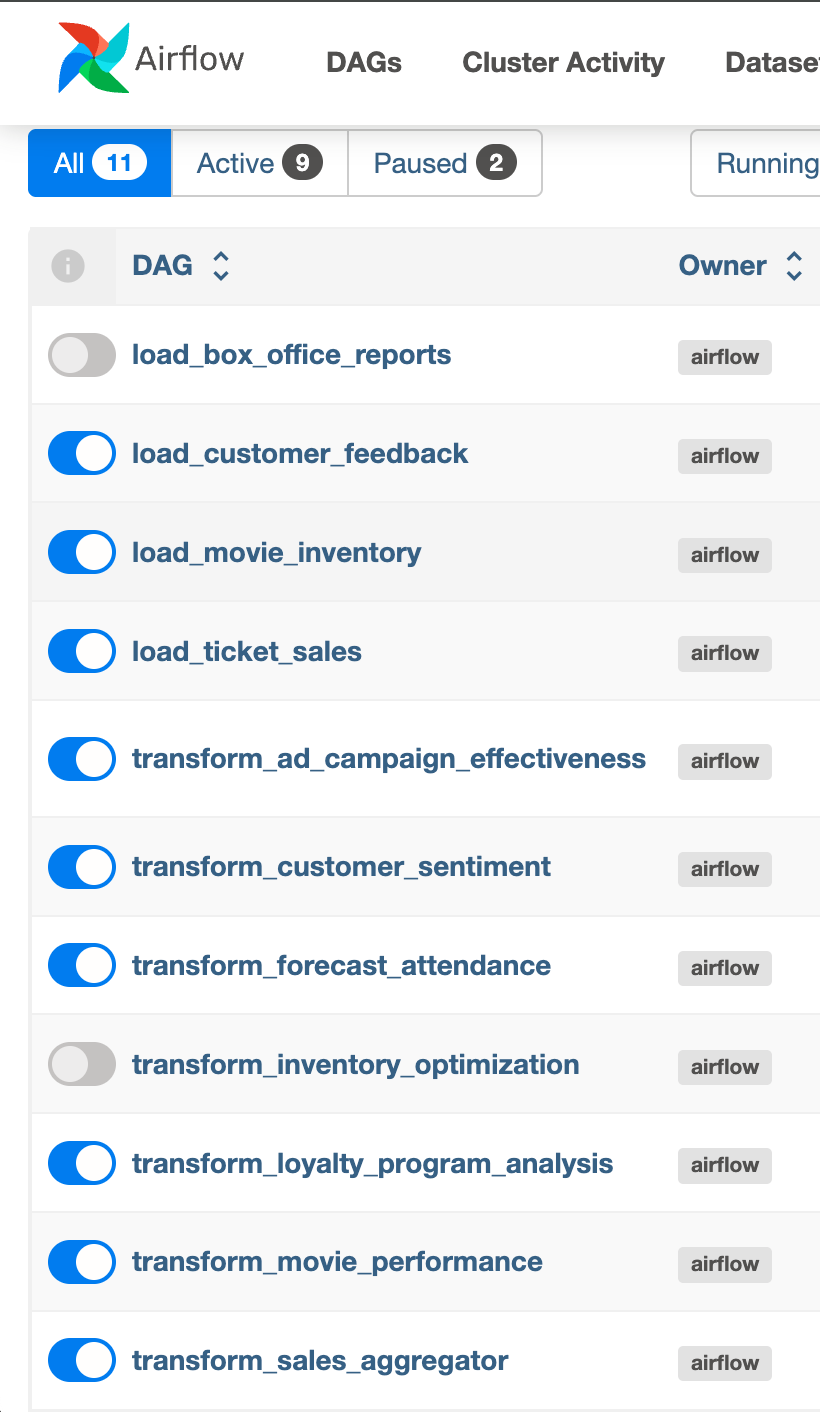

Right now, this is our current state.

Let’s use MCP to prepare for the outage.

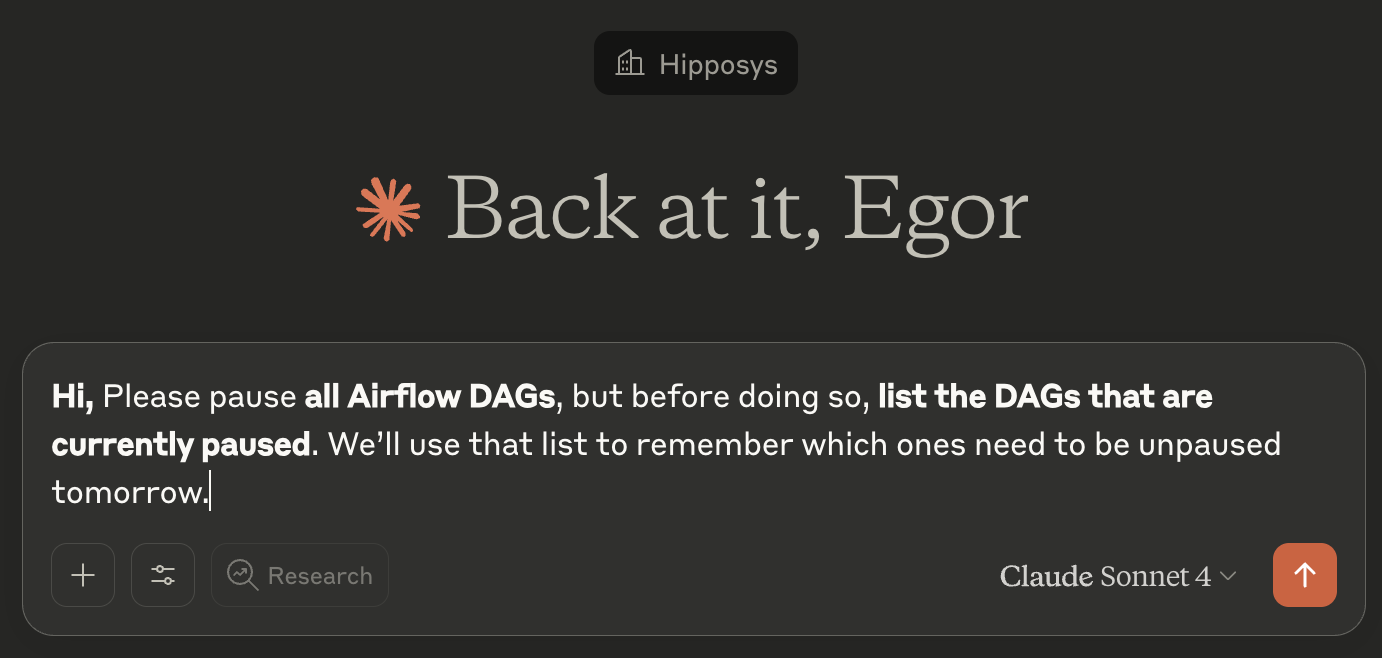

Start with the following prompt:

"Hi, please pause all Airflow DAGs, but before doing so, list the DAGs that are currently paused. We’ll use that list to remember which ones need to be unpaused tomorrow."

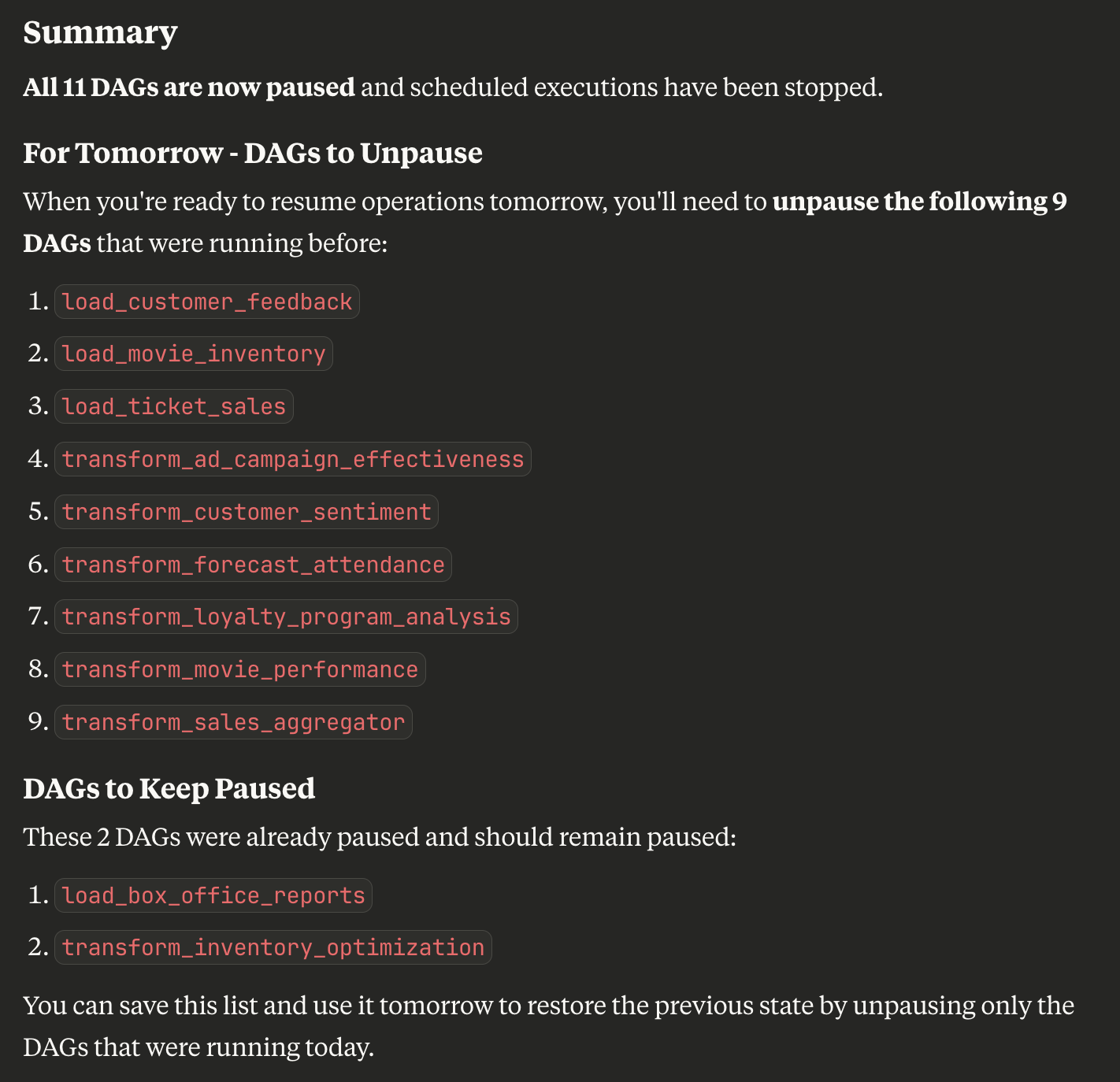

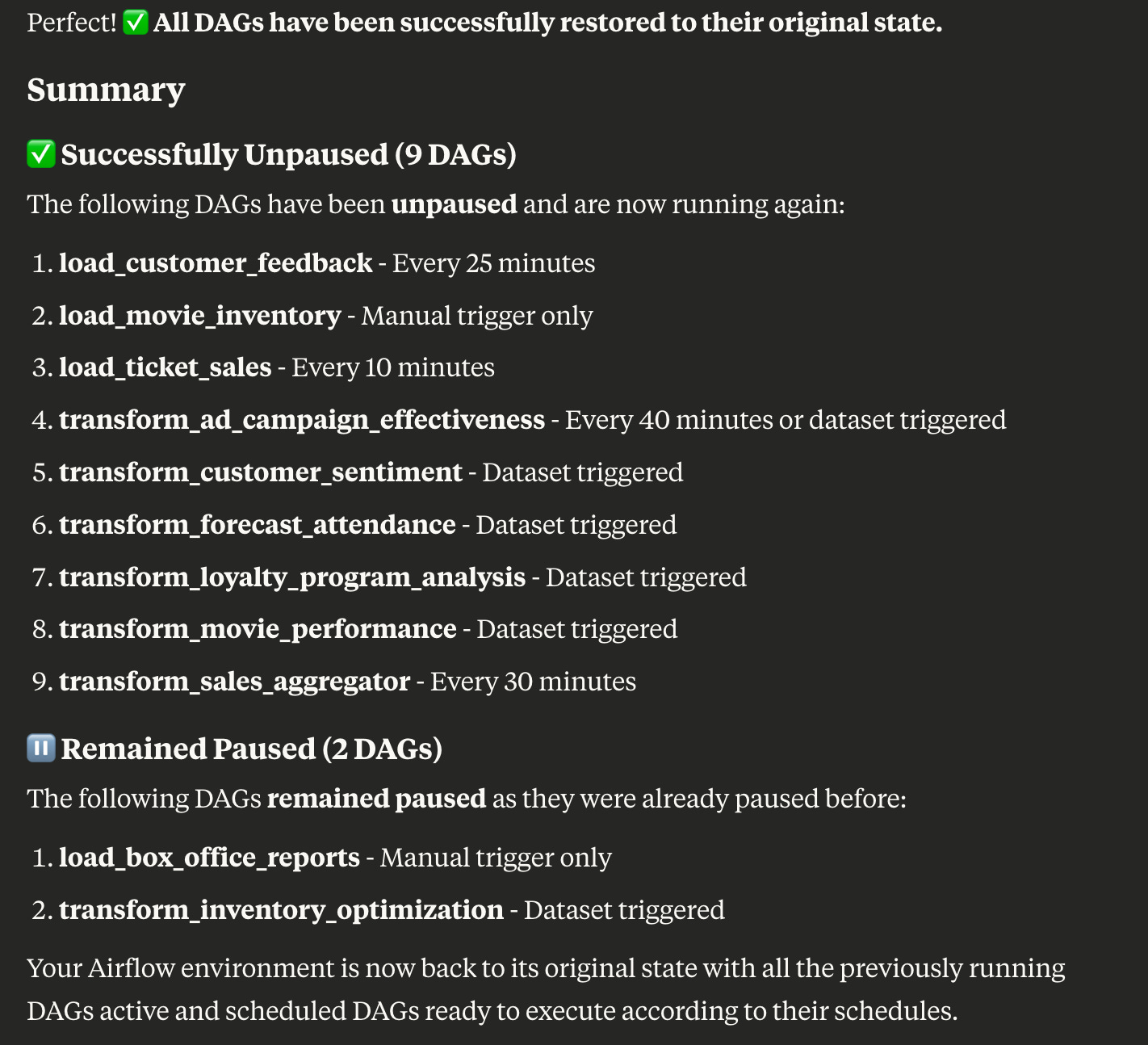

As you can see, all DAGs have been successfully paused.

Now comes the tricky part: restoring normal operations without unpausing DAGs that were paused before the outage.

Run:

"Great. Now please unpause the DAGs, but make sure to leave the ones that were already paused as they are."

And just like that—done.

It only took two prompts to handle a full-cluster pause and restore cycle, cleanly and safely.
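The bookkeeping behind those two prompts can be sketched in a few lines of Python. This is an assumption about the logic involved, not the code of change_dags_pause_status: it takes a snapshot of each DAG's paused flag, works out which DAGs to pause, and later which ones to unpause. The paused states in the example are invented for illustration. In Airflow's stable REST API, each resulting change would correspond to a `PATCH /dags/{dag_id}` request with an `is_paused` field in the body.

```python
def plan_outage_pause(dags):
    """Split a {dag_id: is_paused} snapshot into two sorted lists.

    Returns (already_paused, to_pause): the DAGs to remember and leave
    alone, and the DAGs that must be paused for the outage.
    """
    already_paused = sorted(d for d, paused in dags.items() if paused)
    to_pause = sorted(d for d, paused in dags.items() if not paused)
    return already_paused, to_pause


def plan_restore(dags, already_paused):
    """DAGs to unpause after the outage: all except those paused before."""
    keep_paused = set(already_paused)
    return sorted(d for d in dags if d not in keep_paused)


# Illustrative snapshot; the DAG names appear in this post, the flags do not.
snapshot = {
    "load_ticket_sales": False,
    "load_movie_inventory": True,
    "transform_customer_sentiment": False,
}

already_paused, to_pause = plan_outage_pause(snapshot)
to_unpause = plan_restore(snapshot, already_paused)
```

The key detail is that the list of previously paused DAGs is captured before anything is changed. Once everything is paused, that information can no longer be read back from Airflow.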

When Will It Run?

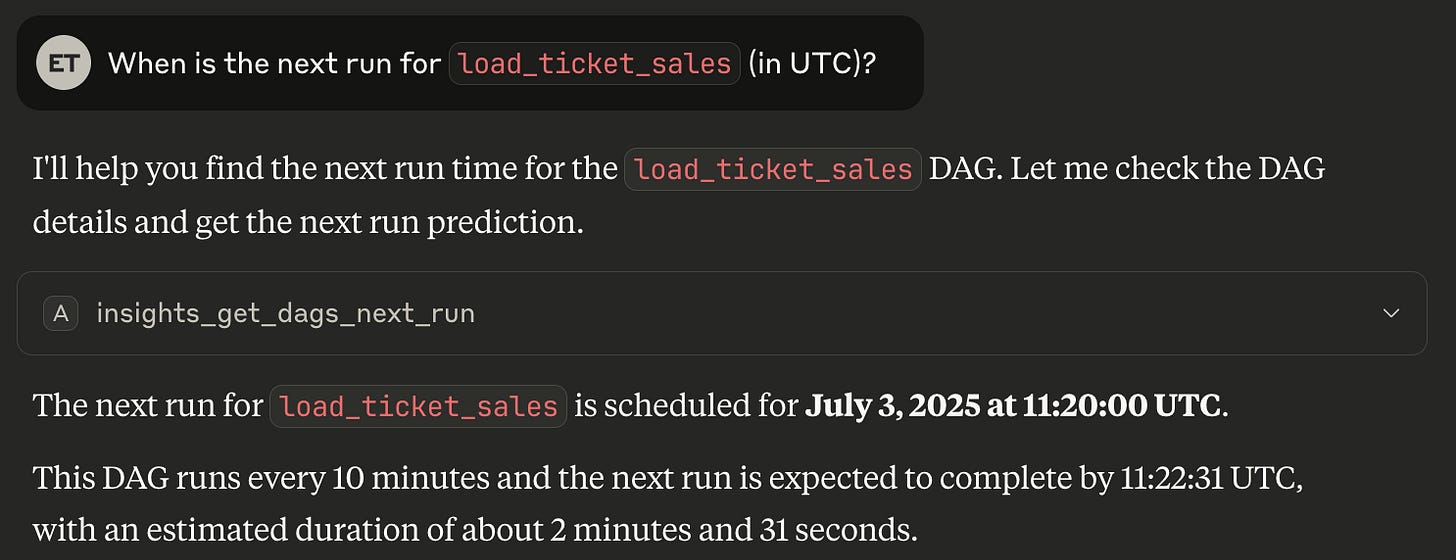

Let’s start with something simple: finding out when load_ticket_sales will run next.

To do that, we’ll need to update claude_desktop_config.json once again by enabling Airflow Insights Mode:

    {
      "mcpServers": {
        "airflow_mcp": {
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "airflow_api_url",
            "-e",
            "airflow_username",
            "-e",
            "airflow_password",
            "-e",
            "POST_MODE",
            "-e",
            "AIRFLOW_INSIGHTS_MODE",
            "hipposysai/airflow-mcp:latest"
          ],
          "command": "docker",
          "env": {
            "airflow_api_url": "http://host.docker.internal:8088/api/v1",
            "airflow_username": "airflow",
            "airflow_password": "airflow",
            "POST_MODE": "true",
            "AIRFLOW_INSIGHTS_MODE": "true"
          }
        }
      }
    }

⚠️ Note: Make sure the Airflow Schedule Insights plugin is installed in your Airflow instance for this to work.

Now, restart Claude Desktop and ask:

“When is the next run for load_ticket_sales (in EST)?”

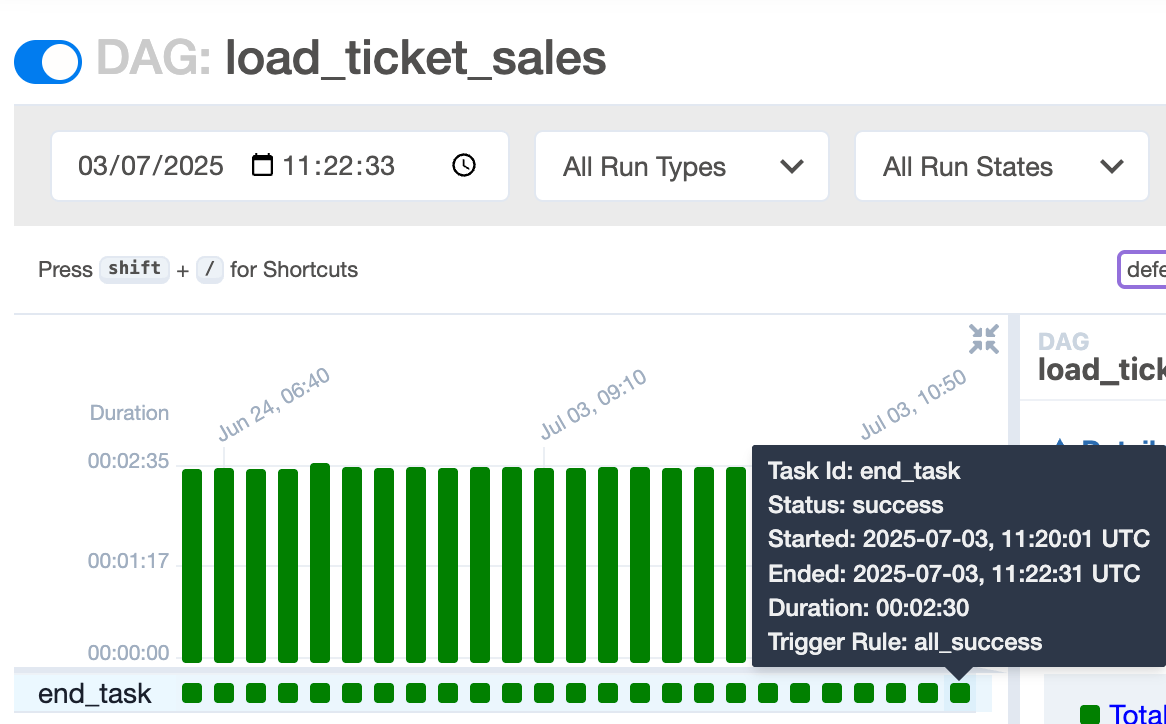

After a short wait, you’ll get a detailed answer including the start time, end time, and expected duration—and it’s accurate. But that’s easy—load_ticket_sales is a scheduled DAG.
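If you want to double-check the time zone conversion in an answer like this, the arithmetic is simple to reproduce. The Python sketch below assumes the predicted start and end arrive as ISO 8601 timestamps in UTC (an assumption about the data, not a documented format of the plugin) and converts them to EST as a fixed UTC-5 offset, so it deliberately ignores daylight saving time. The timestamps are made-up sample values.

```python
from datetime import datetime, timedelta, timezone

# EST as a fixed UTC-5 offset; daylight saving time is not handled here.
EST = timezone(timedelta(hours=-5), "EST")


def parse_utc(iso_text):
    """Parse an ISO 8601 timestamp, treating naive values as UTC."""
    dt = datetime.fromisoformat(iso_text)
    return dt if dt.tzinfo is not None else dt.replace(tzinfo=timezone.utc)


def describe_run(start_iso, end_iso):
    """Summarize a predicted run: EST start/end and duration in seconds."""
    start, end = parse_utc(start_iso), parse_utc(end_iso)
    fmt = "%Y-%m-%d %H:%M:%S %Z"
    return {
        "start": start.astimezone(EST).strftime(fmt),
        "end": end.astimezone(EST).strftime(fmt),
        "duration_seconds": int((end - start).total_seconds()),
    }


# Made-up sample values, not taken from the screenshots in this post.
summary = describe_run("2025-01-15T12:00:00+00:00", "2025-01-15T12:05:30+00:00")
```

For dates when Eastern Daylight Time is in effect, a real time zone database entry such as America/New_York (via the standard zoneinfo module) would be the safer choice.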

Let’s go a step further and try something more dynamic.

Event-Driven DAG Prediction

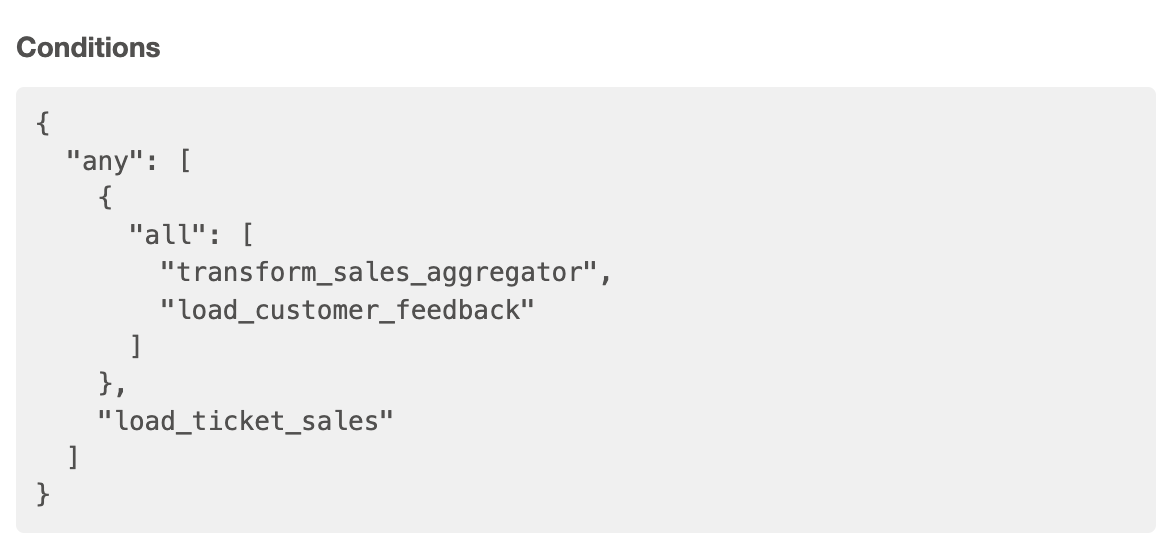

Now let’s look at an event-driven DAG with no fixed schedule: transform_customer_sentiment.

This DAG is triggered when either transform_sales_aggregator and load_customer_feedback both complete, or load_ticket_sales finishes on its own.

According to Claude, the next trigger will come from load_ticket_sales. Let’s wait and see…

And yes—it was triggered just one second later than predicted and finished two seconds later than expected. Even better, Claude correctly identified that load_ticket_sales was the trigger.
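The reasoning behind that prediction can be written down directly. Under the trigger rule described above, the first path fires when the later of its two upstream DAGs finishes, and the second path fires when load_ticket_sales finishes, so the next trigger is whichever of those comes first. The Python sketch below is a simplified illustration of that rule, not the plugin's actual algorithm, and the finish times are invented.

```python
from datetime import datetime


def predict_trigger(finish):
    """Predict when transform_customer_sentiment is triggered, and by what.

    `finish` maps upstream dag_id -> predicted finish time. The rule is
    (transform_sales_aggregator AND load_customer_feedback) OR
    load_ticket_sales, so the AND path is ready at the later of its two
    finish times and the overall trigger is the earlier of the two paths.
    """
    both = max(finish["transform_sales_aggregator"],
               finish["load_customer_feedback"])
    solo = finish["load_ticket_sales"]
    if solo <= both:
        return solo, "load_ticket_sales"
    return both, "transform_sales_aggregator + load_customer_feedback"


# Invented finish times for illustration only.
predicted = {
    "transform_sales_aggregator": datetime(2025, 1, 15, 9, 0),
    "load_customer_feedback": datetime(2025, 1, 15, 14, 0),
    "load_ticket_sales": datetime(2025, 1, 15, 12, 5),
}

when, source = predict_trigger(predicted)
```

With these sample values the AND path is not ready until 14:00, so load_ticket_sales at 12:05 is reported as the trigger, which mirrors the outcome described above.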

Querying Multiple DAGs at Once

Now let’s try asking about several DAGs in one go:

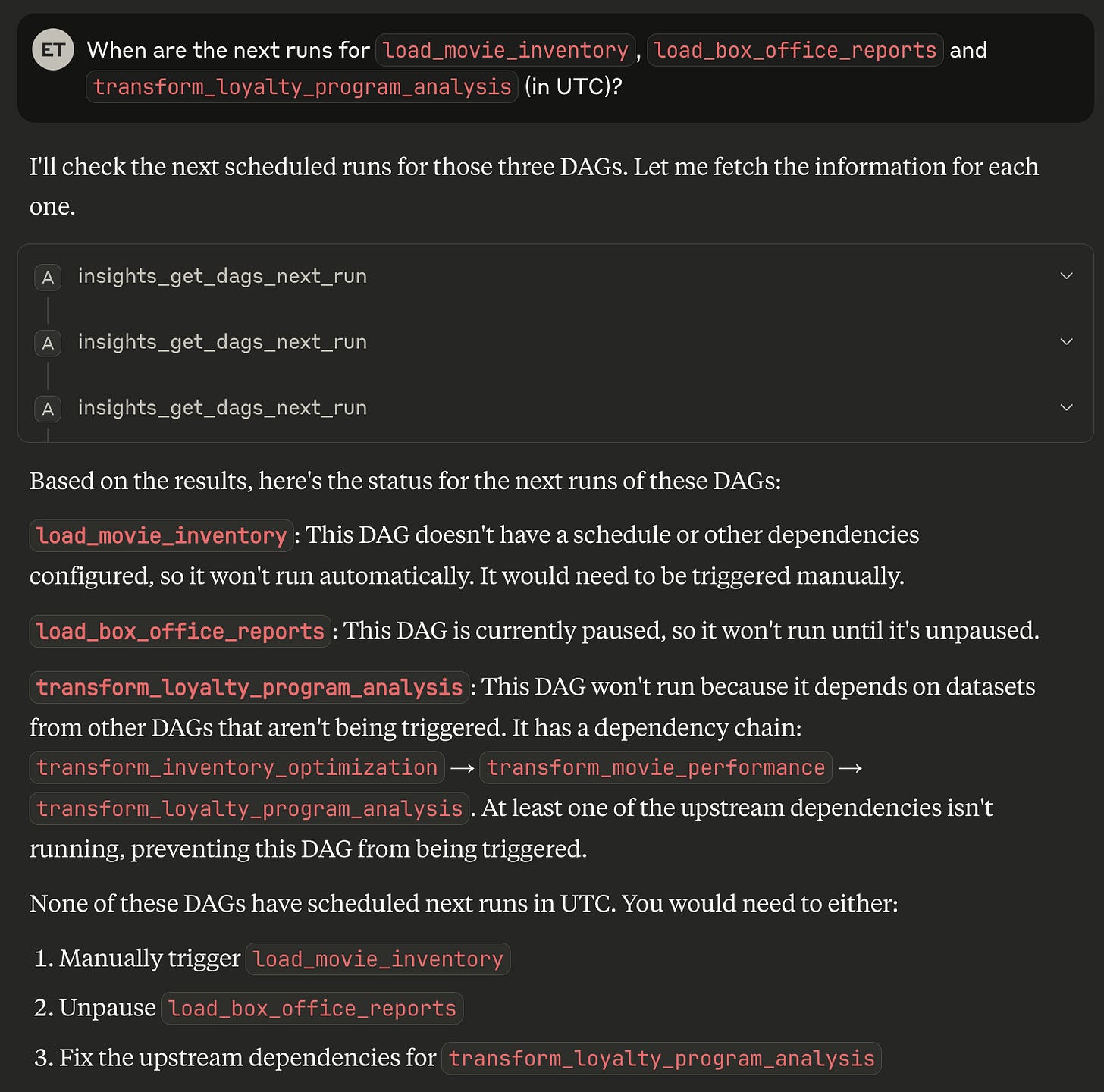

“When are the next runs for load_movie_inventory, load_box_office_reports, and transform_loyalty_program_analysis (in UTC)?”

Claude replies that none of them will run, and that’s correct!

With just a few configuration changes, you can now monitor and predict future DAG runs directly from Claude Desktop—scheduled or event-driven. No guesswork, no surprises.

Summary: Next-Level DAG Observability and Control

With the latest updates to Airflow MCP, Claude Desktop evolves from a smart interface into a powerful control and observability tool for Airflow users. You can now operate in Safe Mode for peace of mind in production, pause and restore DAG states safely during outages, and even predict when DAGs—scheduled or event-driven—will run next, thanks to the new Airflow Schedule Insights integration. Whether you're running a complex pipeline or preparing for maintenance, these upgrades ensure you're always one step ahead—with just a couple of prompts.