Introduction

Unless you’ve been living under a rock, you’ve probably heard of MCP (Model Context Protocol) by now. In a nutshell, it’s a way to add extra functionality to AI systems like Claude Desktop by giving them new “tools” they can use. You do this by creating an MCP server, which exposes a list of new capabilities to the AI system.

At Hipposys, we built a custom MCP server for Apache Airflow that can transform how teams interact with their orchestration platform. Our implementation enables you to query pipeline statuses, troubleshoot failures, retrieve DAG information, and trigger different DAGs depending on their statuses.

This article will guide you through setting up our MCP server locally, so you can build AI assistants that manage your Airflow environment with plain-language commands, without navigating the UI or modifying code.

What makes this especially powerful is that it opens up Airflow insights to a wider range of users. Product managers, customer-facing teams, and other stakeholders—who may not have access to Airflow or feel comfortable using it—can now get clear answers about which jobs ran, failed, or succeeded. This enables better collaboration across teams and reduces the dependency on engineering for operational insights.

Check out our repository at https://github.com/hipposys-ltd/airflow-mcp to get started with the implementation.

Running Airflow MCP locally

Let's start by running MCP in Claude Desktop. You can connect to an existing Airflow instance if you have permissions for it. If you don't have a running Airflow environment or just want to test things out, we’ve provided a demo Airflow environment in the Airflow MCP repository: clone the repo at https://github.com/hipposys-ltd/airflow-mcp and run the command "just airflow".

Installing Just on Mac

You can install Just on macOS using Homebrew:

    brew install just

Just is a command runner that executes commands defined in a justfile. It's similar to Make but with a simpler syntax and additional features, making it ideal for automating project tasks like our Airflow setup.

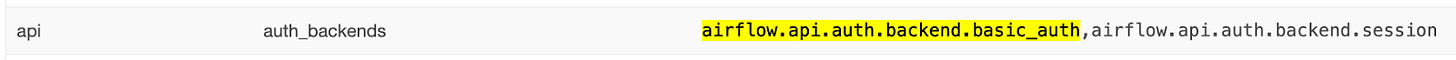

Important: If you're using your own Airflow cluster, make sure the REST API is enabled with Basic Auth.

You can enable it by setting the following environment variable in your Airflow configuration:

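    AIRFLOW__API__AUTH_BACKEND=airflow.api.auth.backend.basic_auth

On Airflow 2.3 and later this option is named AIRFLOW__API__AUTH_BACKENDS (plural), and the singular form is deprecated. More details can be found in the Airflow API Authentication documentation.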
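Before wiring up Claude Desktop, it can help to confirm that the REST API really accepts Basic Auth. The snippet below is a minimal sketch of such a check using only the Python standard library. The helper names are ours, and the URL in the usage note assumes the demo environment is reachable from the host on port 8088; adjust both to your setup.

```python
import base64
import json
import urllib.request


def build_dags_request(api_url: str, username: str, password: str) -> urllib.request.Request:
    """Build a GET request for the DAG list endpoint with a Basic Auth header."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{api_url.rstrip('/')}/dags?limit=1",
        headers={"Authorization": f"Basic {token}"},
    )


def check_api(api_url: str, username: str, password: str) -> int:
    """Return the number of DAGs the API reports.

    urllib raises HTTPError (for example 401) if the credentials are rejected
    or Basic Auth is not enabled.
    """
    request = build_dags_request(api_url, username, password)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)["total_entries"]
```

Calling check_api("http://localhost:8088/api/v1", "airflow", "airflow") against the demo environment should return a DAG count rather than raise an error if authentication is set up correctly.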

Now open Claude Desktop, go to Settings, select the Developer tab, and edit the MCP config. Paste the following configuration, then restart Claude Desktop:

    {
      "mcpServers": {
        "airflow_mcp": {
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "airflow_api_url",
            "-e",
            "airflow_username",
            "-e",
            "airflow_password",
            "hipposysai/airflow-mcp:latest"
          ],
          "command": "docker",
          "env": {
            "airflow_api_url": "http://host.docker.internal:8088/api/v1",
            "airflow_password": "airflow",
            "airflow_username": "airflow"
          }
        }
      }
    }

This MCP setting requires Docker to be installed as it will use our image (hipposysai/airflow-mcp) to run the Airflow MCP server.

Change airflow_api_url, airflow_username and airflow_password if you have your own Airflow cluster; otherwise leave them as shown in the example.
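If you would rather generate the configuration than edit it by hand, a small script can do it. This is only a sketch: build_mcp_config is a hypothetical helper of ours, and the URL and credentials at the bottom are placeholder values, not a real cluster.

```python
import json


def build_mcp_config(api_url: str, username: str, password: str) -> dict:
    """Return the Claude Desktop MCP config for the given Airflow connection."""
    env = {
        "airflow_api_url": api_url,
        "airflow_username": username,
        "airflow_password": password,
    }
    args = ["run", "-i", "--rm"]
    for name in env:
        # "-e NAME" without a value makes Docker forward NAME from the
        # environment of the docker process, which is populated from "env".
        args += ["-e", name]
    args.append("hipposysai/airflow-mcp:latest")
    return {"mcpServers": {"airflow_mcp": {"command": "docker", "args": args, "env": env}}}


# Placeholder values for illustration only; substitute your own cluster details.
config = build_mcp_config("https://airflow.example.com/api/v1", "mcp_user", "change-me")
print(json.dumps(config, indent=2))
```

The printed JSON has the same structure as the configuration shown earlier and can be pasted into the MCP config in its place.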

Once configured, open Claude Desktop and ask "What DAGs do we have in our Airflow cluster?"

Claude will connect to your Airflow instance through the MCP server and list all available DAGs.

Expanded Airflow MCP Functionality

Let's explore more complex operations with Airflow MCP. We'll identify all DAGs that failed during their most recent execution and trigger them automatically, then monitor their status.

“Identify all DAGs with failed status in their most recent execution and trigger a new run for each one”

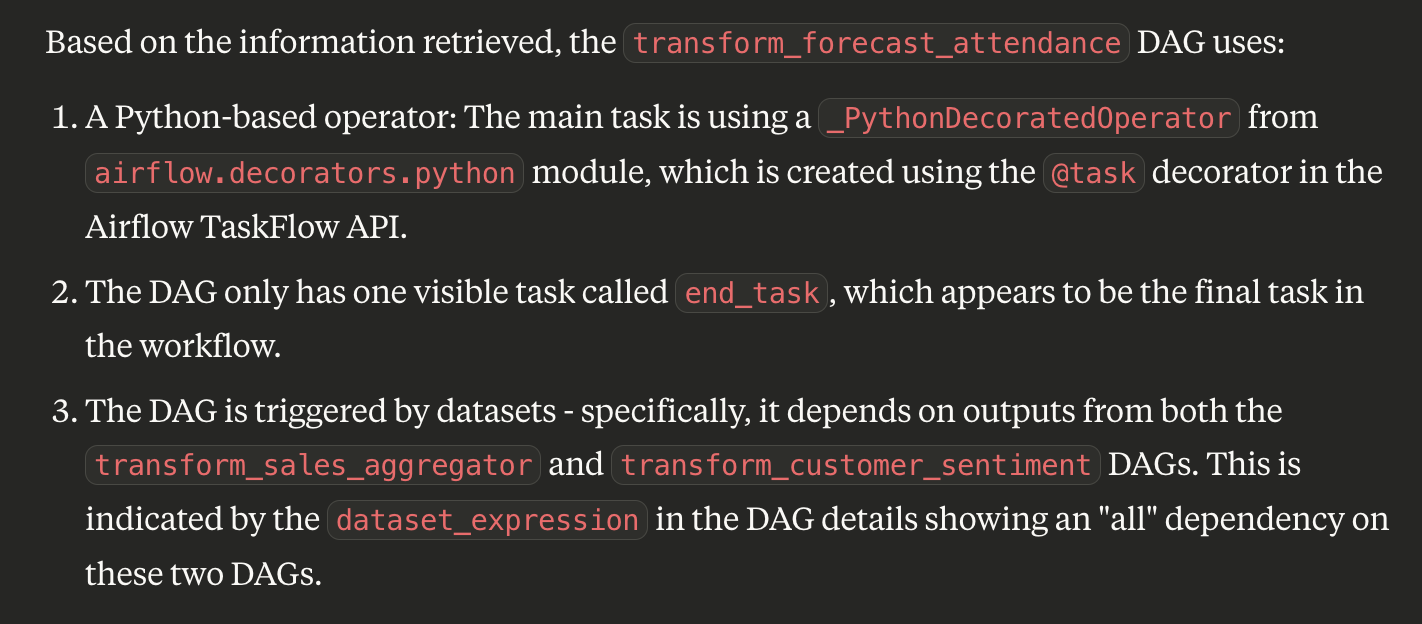

The analysis reveals that the transform_forecast_attendance DAG failed and was subsequently rerun. Checking the Airflow UI confirms it was successfully triggered and is now executing.
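For comparison, here is roughly what the same request looks like when scripted by hand against Airflow's stable REST API. This is not the MCP server's implementation, just a sketch under assumptions: API_URL and the credentials match the demo environment as seen from the host, the helper names are ours, and the DAG list fits in one page (the API returns at most 100 DAGs per call by default).

```python
import base64
import json
import urllib.request
from typing import Optional

API_URL = "http://localhost:8088/api/v1"  # assumed: demo environment, seen from the host
AUTH = base64.b64encode(b"airflow:airflow").decode("ascii")  # demo credentials


def call(method: str, path: str, body: Optional[dict] = None) -> dict:
    """Send one authenticated JSON request to the Airflow REST API."""
    request = urllib.request.Request(
        f"{API_URL}{path}",
        method=method,
        data=None if body is None else json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Basic {AUTH}", "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def latest_run_failed(dag_runs: list) -> bool:
    """True if the first (most recent) run in the list has state 'failed'."""
    return bool(dag_runs) and dag_runs[0]["state"] == "failed"


def rerun_failed_dags() -> list:
    """Trigger a new run for every DAG whose most recent run failed."""
    triggered = []
    for dag in call("GET", "/dags")["dags"]:
        dag_id = dag["dag_id"]
        # Newest run first, one result only.
        runs = call("GET", f"/dags/{dag_id}/dagRuns?order_by=-execution_date&limit=1")
        if latest_run_failed(runs["dag_runs"]):
            # An empty body lets Airflow generate the run id and logical date.
            call("POST", f"/dags/{dag_id}/dagRuns", body={})
            triggered.append(dag_id)
    return triggered
```

Calling rerun_failed_dags() returns the IDs of the DAGs it triggered. With the MCP server, the single sentence above replaces all of this.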

When we query the status of this new run, we discover it failed again.

“Did it succeed?”

This recurring failure prompted us to investigate which operators are being used by the DAG.

Interestingly, it employs only a single Python operator.

To understand the issue better, we checked its historical performance and found that this DAG has never completed successfully.

“Has this DAG ever succeeded?”
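The same question can be put to the REST API by listing the DAG's runs filtered to successful ones and checking whether any exist. The sketch below only builds the request path and interprets the response. Both helper names are hypothetical, and it assumes your Airflow version supports the state filter on the dagRuns endpoint.

```python
import urllib.parse


def success_runs_path(dag_id: str) -> str:
    """Build the REST path that lists at most one successful run of a DAG."""
    query = urllib.parse.urlencode({"state": "success", "limit": 1})
    return f"/dags/{urllib.parse.quote(dag_id, safe='')}/dagRuns?{query}"


def has_ever_succeeded(response: dict) -> bool:
    """Interpret the dagRuns list response: any entry means at least one success."""
    return response["total_entries"] > 0


print(success_runs_path("transform_forecast_attendance"))
```

Fetch that path with any HTTP client using Basic Auth and pass the decoded JSON to has_ever_succeeded.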

Such troubleshooting becomes significantly more streamlined with Airflow MCP. Note that throughout this entire process—retrieving Airflow metadata, identifying failed DAGs, triggering reruns, and analyzing DAG components—we accomplished everything directly through the MCP interface without having to access the Airflow UI.

Conclusion

Airflow MCP changes how data teams interact with their workflow orchestration. By putting a natural-language interface in front of Airflow's capabilities, it cuts down the time spent navigating complex interfaces or writing custom scripts. But this is just the beginning of what's possible, and the current functionality can be substantially extended.

We invite the community to contribute to this open-source initiative, whether you're interested in adding new features, improving documentation, or enhancing compatibility with different LLM providers. Visit our GitHub repository at https://github.com/hipposys-ltd/airflow-mcp to join the project, submit pull requests, or share your ideas. Together, we can make Airflow more accessible to the teams that depend on its data pipelines.

In our next blog post, we'll dive into how to integrate Airflow MCP with the LangChain library and leverage it with various LLMs to unlock even more flexible and intelligent workflow orchestration.