Introduction

Recently, Trino introduced built-in AI functions (see the docs), similar to what Databricks offers. While this is a great step forward, there are some notable limitations.

First, Trino's AI functions don’t yet support AWS Bedrock—currently, they only work with OpenAI, Anthropic, and local models via Ollama. This means that if you want to use Claude while keeping your data within your VPC, you're out of luck.

Second, the implementation is somewhat rigid. The built-in functions rely on predefined prompts that are neither visible nor customizable, making it impossible to tweak prompts or debug responses. Moreover, there's no official way to create fully custom AI functions with your own prompts and parameters.

To overcome these limitations, we built a demo that integrates Redshift, AWS Lambda, and Bedrock to create custom AI functions. In this tutorial, we’ll not only replicate some of Trino's AI functions but also extend them with custom implementations tailored to specific use cases.

Lambda Functions in Redshift

Let's start by creating a simple Lambda function that can be used within Amazon Redshift.

Step 1: Set Up IAM Permissions

First, we need to create an IAM Role that allows Redshift to invoke Lambda functions.

Navigate to AWS IAM → Roles → Create Role.

Select Redshift - Customizable as the trusted service.

Attach an appropriate policy. For this tutorial, I created a policy that allows invoking all Lambda functions, but in a real-world scenario, you should restrict access to specific Lambda functions for better security.
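
To illustrate the "restrict access" advice above, here is a minimal sketch of a policy document that allows invoking one specific function only. The account ID, region, and helper name are hypothetical placeholders, not values from this tutorial's setup.

```python
import json


def lambda_invoke_policy(function_arn: str) -> dict:
    """Build an IAM policy that allows invoking a single Lambda function."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "lambda:InvokeFunction",
                # Scope the permission to one function instead of "*".
                "Resource": function_arn,
            }
        ],
    }


# Hypothetical ARN: replace the region and account ID with your own.
policy = lambda_invoke_policy(
    "arn:aws:lambda:us-east-1:123456789012:function:test_redshift_function"
)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM policy editor when creating the role.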

Next, associate the IAM role with your Redshift cluster:

Open your Redshift cluster settings.

Choose Associate IAM Role and select the role you just created.

Now, your Redshift cluster can invoke Lambda functions!

Step 2: Create a Lambda Function

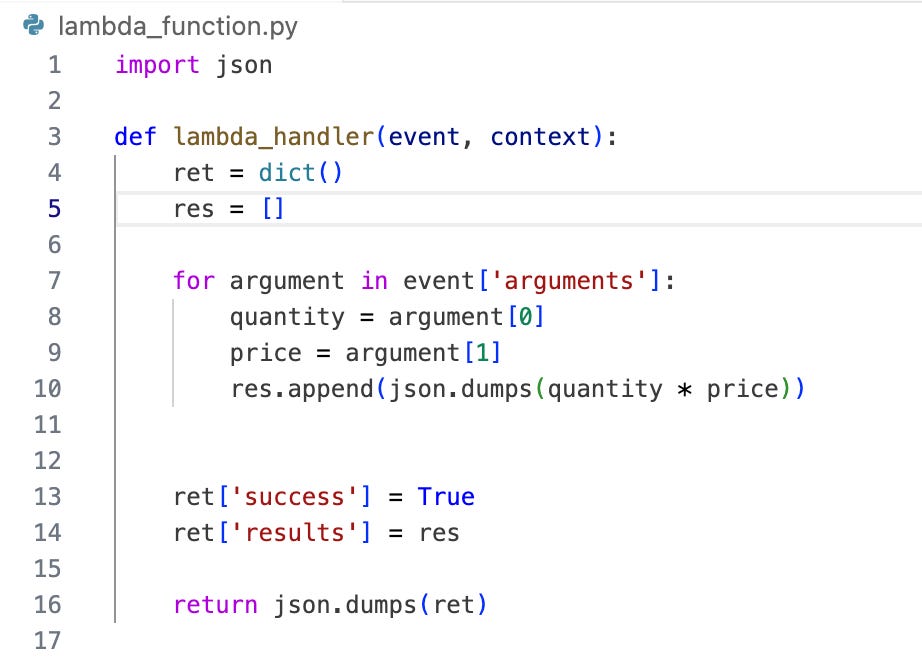

Let’s create a simple Lambda function called test_redshift_function. This function will take two input columns and return their product.
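
A minimal sketch of such a handler, assuming the Python runtime: Redshift sends a batch of rows in event["arguments"] (one inner list per row) and expects a JSON string back with a "success" flag and a "results" list in the same row order. The NULL handling is my own assumption about the desired behavior.

```python
import json


def lambda_handler(event, context):
    """Multiply the two column values Redshift passes for each row."""
    try:
        results = []
        for a, b in event["arguments"]:
            # SQL NULLs arrive as None; propagate them instead of failing.
            results.append(None if a is None or b is None else a * b)
        return json.dumps({"success": True, "results": results})
    except Exception as exc:
        # On failure, Redshift surfaces error_msg to the query author.
        return json.dumps({"success": False, "error_msg": str(exc)})
```

The number of results must match the number of input rows, since Redshift maps them back to rows by position.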

Step 3: Invoking Lambda from Redshift

To call a Lambda function from Redshift, we use external functions.

When defining an external function in Redshift, you need to:

Specify the IAM role with Lambda invocation permissions.

Link it to the corresponding Lambda function.

Define the expected input parameters.

Once the function is created, you can invoke it directly from Redshift queries!
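
The three pieces listed above map onto Redshift's CREATE EXTERNAL FUNCTION statement. The sketch below builds that statement as a string; the SQL function name f_multiply, the role ARN, and the table name in the sample query are hypothetical.

```python
def external_function_ddl(name, arg_types, return_type, lambda_name, iam_role_arn):
    """Build a CREATE EXTERNAL FUNCTION statement for a Lambda-backed UDF.

    Values are interpolated directly, so only pass trusted identifiers.
    """
    args = ", ".join(arg_types)
    return (
        f"CREATE OR REPLACE EXTERNAL FUNCTION {name}({args})\n"
        f"RETURNS {return_type}\n"
        "VOLATILE\n"
        f"LAMBDA '{lambda_name}'\n"
        f"IAM_ROLE '{iam_role_arn}';"
    )


ddl = external_function_ddl(
    name="f_multiply",                      # hypothetical SQL function name
    arg_types=["int", "int"],               # the two input columns
    return_type="int",
    lambda_name="test_redshift_function",
    iam_role_arn="arn:aws:iam::123456789012:role/RedshiftInvokeLambda",  # placeholder
)
print(ddl)

# Once created, the function is called like any scalar function:
print("SELECT a, b, f_multiply(a, b) FROM my_table;")
```

VOLATILE tells Redshift not to assume repeated calls return the same value, which is the safer choice once the function wraps an LLM.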

Enhance Grammar with AI Functions

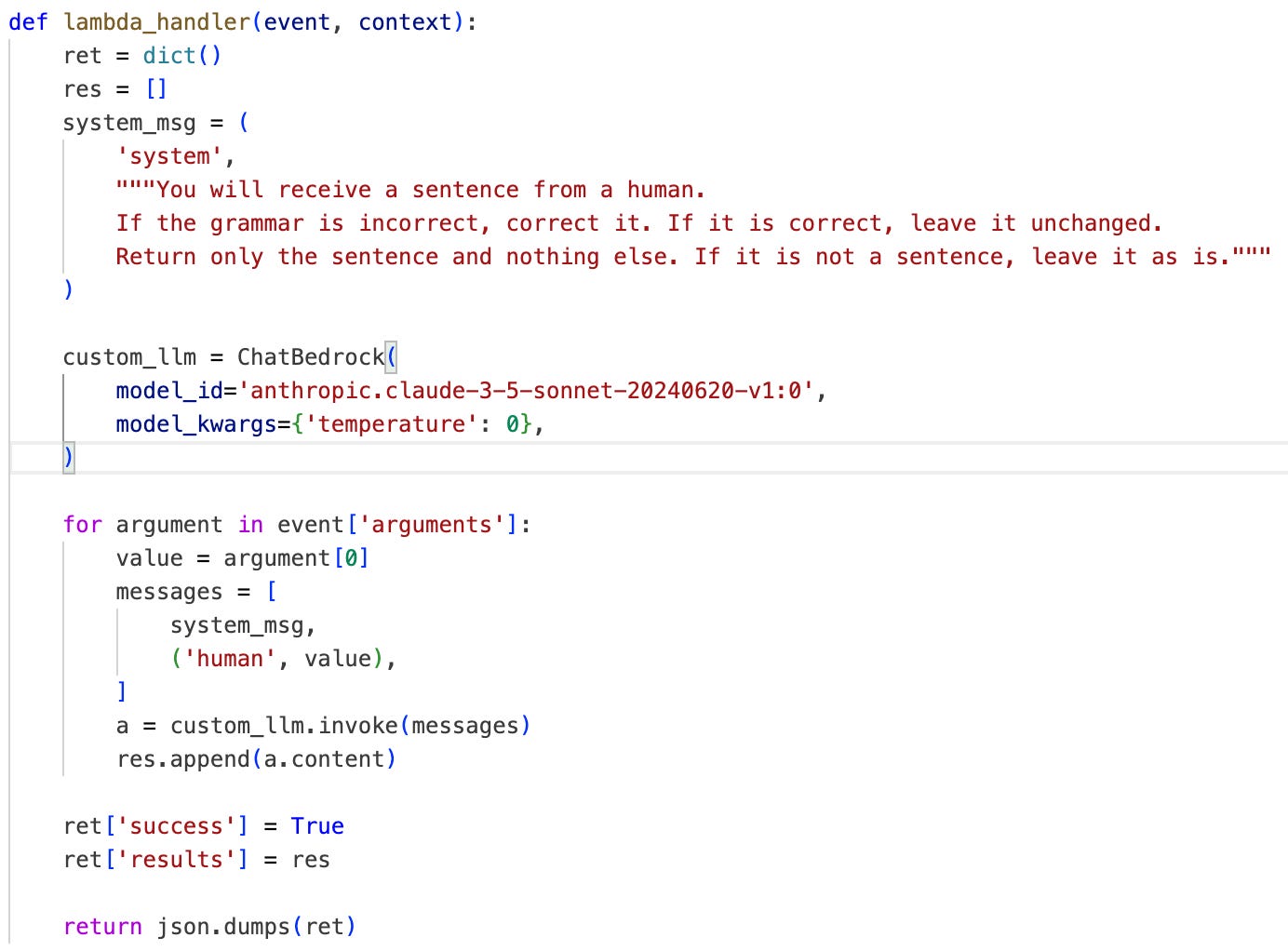

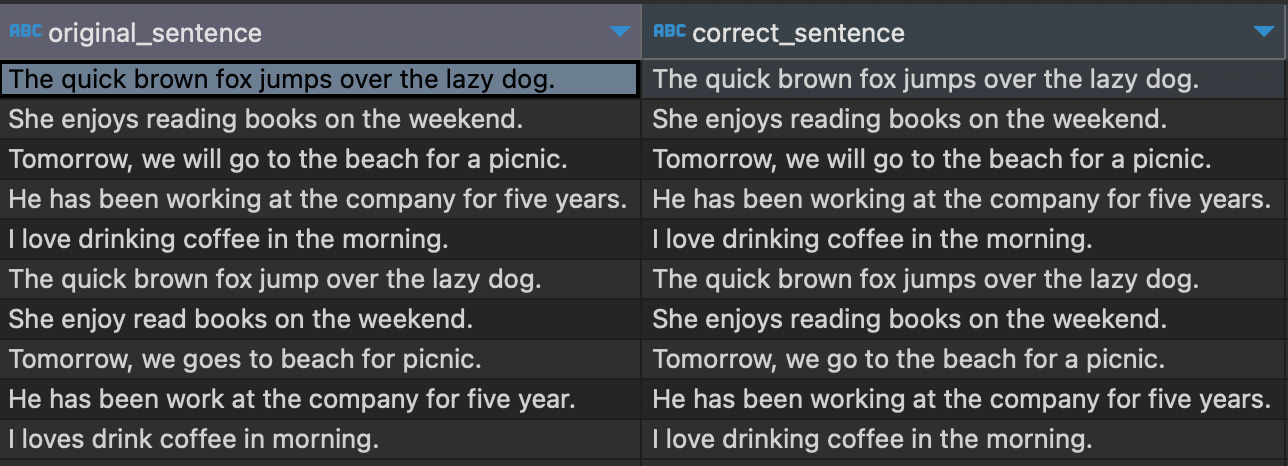

Trino introduced the ai_fix_grammar function, which takes a column of text strings and returns grammatically corrected sentences. Let's recreate a similar function ourselves using an AWS Lambda function with an x86_64 architecture.

We'll leverage the langchain library to call an AWS Bedrock model and use the following prompt to correct sentences with AI:

"You will receive a sentence from a human. If the grammar is incorrect, correct it. If it is correct, leave it unchanged. Return only the sentence and nothing else. If it is not a sentence, leave it as is."

Implementing the Function

Below is the complete code for our function:
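
The original listing is not reproduced here, so what follows is only a minimal sketch of how such a handler could look. It assumes the langchain-aws package (ChatBedrock) and a Claude model ID chosen for illustration; the helper names make_llm and fix_grammar are mine.

```python
import json

PROMPT = (
    "You will receive a sentence from a human. If the grammar is incorrect, "
    "correct it. If it is correct, leave it unchanged. Return only the sentence "
    "and nothing else. If it is not a sentence, leave it as is."
)


def make_llm():
    # Imported lazily so a missing package is reported through error_msg.
    from langchain_aws import ChatBedrock

    # Assumed model ID; use any Bedrock chat model enabled in your account.
    return ChatBedrock(model_id="anthropic.claude-3-haiku-20240307-v1:0")


def fix_grammar(text, llm):
    """Send one sentence to the model and return its corrected form."""
    if not text:
        return text  # pass NULL and empty strings through untouched
    reply = llm.invoke([("system", PROMPT), ("human", text)])
    return reply.content.strip()


def lambda_handler(event, context, llm=None):
    try:
        if llm is None:
            llm = make_llm()
        # One single-column row per input record, in order.
        results = [fix_grammar(row[0], llm) for row in event["arguments"]]
        return json.dumps({"success": True, "results": results})
    except Exception as exc:
        return json.dumps({"success": False, "error_msg": str(exc)})
```

The optional llm parameter exists only so the handler can be exercised locally with a stub; Lambda itself always calls it with event and context.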

While setting this up, we may encounter three common errors:

Error 1: Missing Library

Since we’re using langchain, we need to install it. The quickest way to package dependencies for Lambda is to install them locally and upload them as a ZIP file:

Install dependencies:

pip install -r requirements.txt -t . --platform manylinux2014_x86_64 --only-binary=:all:

(The --platform manylinux2014_x86_64 flag ensures compatibility with our Lambda architecture.)

Create a ZIP package:

zip -r {lambda_name}.zip .

Deploy the function:

aws lambda update-function-code --function-name {lambda_name} --zip-file fileb://{lambda_name}.zip

Error 2: Timeout Issues

LLM calls can take longer than typical Lambda executions. Since the default Lambda timeout is only 3 seconds, we should increase it:

Navigate to Configuration → General Configuration

Click Edit

Increase the Timeout value

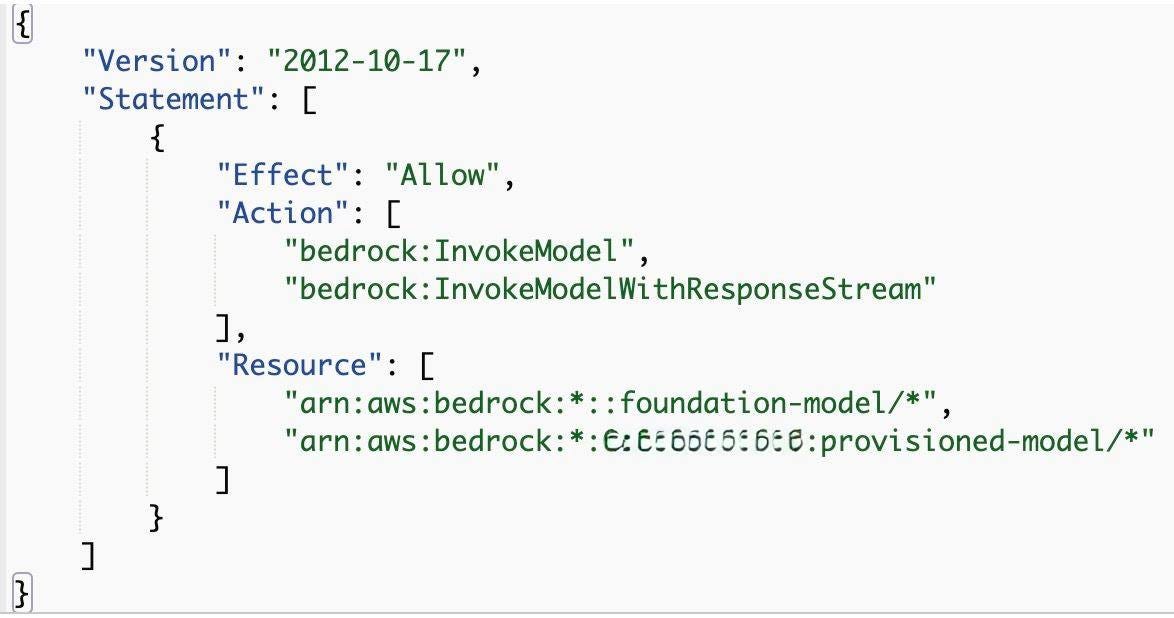
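
The same change can be scripted rather than made in the console. This is a sketch assuming boto3; the helper name and the 60-second default are my own choices, and the client is passed in so the function can be tried with a stub.

```python
def set_lambda_timeout(lambda_client, function_name, seconds=60):
    """Raise a function's timeout through the Lambda API.

    lambda_client is expected to be boto3.client("lambda").
    """
    # Lambda accepts timeouts from 1 second up to 900 seconds (15 minutes).
    if not 1 <= seconds <= 900:
        raise ValueError("Lambda timeout must be between 1 and 900 seconds")
    return lambda_client.update_function_configuration(
        FunctionName=function_name,
        Timeout=seconds,
    )


# Usage (requires AWS credentials; the function name is a placeholder):
#   import boto3
#   set_lambda_timeout(boto3.client("lambda"), "my_grammar_function", 60)
```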

Error 3: Insufficient Permissions

To allow our Lambda function to invoke AWS Bedrock, we need to update its role:

Go to IAM Roles

Attach a policy that grants Bedrock invocation permissions
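
As a sketch of what that policy could contain, the snippet below allows invoking one foundation model. The region and model ID are assumptions for illustration; foundation-model ARNs carry no account ID.

```python
import json


def bedrock_invoke_policy(model_arn: str) -> dict:
    """Build an IAM policy letting the Lambda role call one Bedrock model."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": model_arn,
            }
        ],
    }


# Assumed region and model; match them to the model your function calls.
bedrock_policy = bedrock_invoke_policy(
    "arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-haiku-20240307-v1:0"
)
print(json.dumps(bedrock_policy, indent=2))
```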

Final Steps

Once the Lambda function is deployed, we just need to create an external function as we did before—and it's ready to use! Some sentences will remain unchanged if they are already correct, while others will be automatically corrected by the AI.

AI Function with a Custom Prompt

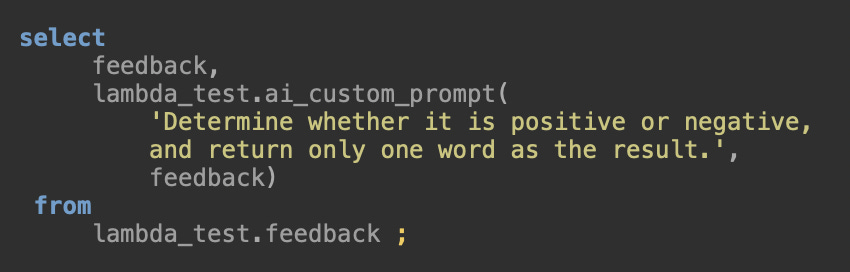

Now, let's take it a step further and allow our analysts to customize the LLM’s behavior by passing a prompt as a parameter. Here’s the code for our Lambda function:

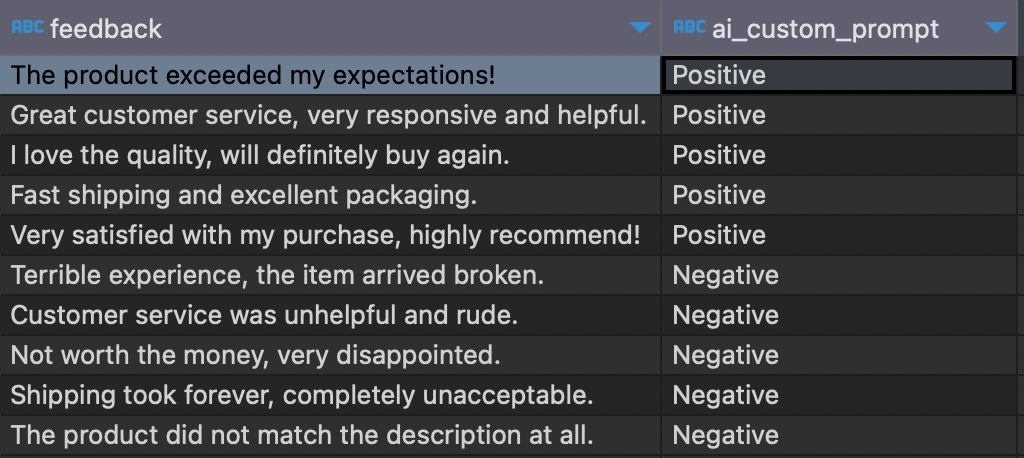

For this example, I created a "feedback" table. Let’s say we want to use the LLM to determine whether each feedback entry is positive or negative. To achieve this, we pass our custom prompt:

"Determine whether the feedback is positive or negative, and return only one word as the result."

Next, we provide the "feedback" column as input. The function processes each entry, and as expected, all feedback is correctly classified.
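
The custom-prompt function described above can be sketched as follows. This is not the original listing: it assumes the langchain-aws package, an illustrative Claude model ID, and that the external function is declared with two varchar arguments in the order (prompt, text).

```python
import json


def make_llm():
    # Imported lazily so a missing package is reported through error_msg.
    from langchain_aws import ChatBedrock

    # Assumed model ID; use any Bedrock chat model enabled in your account.
    return ChatBedrock(model_id="anthropic.claude-3-haiku-20240307-v1:0")


def lambda_handler(event, context, llm=None):
    """Apply a caller-supplied prompt to each text value in the batch."""
    try:
        if llm is None:
            llm = make_llm()
        results = []
        for prompt, text in event["arguments"]:
            if not prompt or not text:
                results.append(None)  # nothing to ask or nothing to analyze
                continue
            # The analyst's prompt acts as the system message.
            reply = llm.invoke([("system", prompt), ("human", text)])
            results.append(reply.content.strip())
        return json.dumps({"success": True, "results": results})
    except Exception as exc:
        return json.dumps({"success": False, "error_msg": str(exc)})
```

With an external function named, say, ai_prompt (a hypothetical name) bound to this Lambda, the sentiment query would read: SELECT feedback, ai_prompt('Determine whether the feedback is positive or negative, and return only one word as the result.', feedback) FROM feedback. Each row triggers its own model call, so cost and latency grow with table size.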

Conclusion

By integrating Amazon Redshift, AWS Lambda, and Bedrock, we’ve demonstrated how to create flexible, custom AI functions tailored to specific use cases. This approach not only allows analysts to modify prompts and debug outputs, but it also enables the addition of new AI-powered functions without waiting for official support from query engines like Trino.

Most importantly, calling AWS Bedrock from inside your VPC (for example, through a VPC interface endpoint) keeps your data within your own AWS environment, addressing privacy and compliance concerns. Whether you need grammar correction, sentiment analysis, or any other AI-powered transformation, this architecture provides a scalable, customizable, and secure foundation for AI-driven analytics within Redshift.