Introduction

Have you ever encountered a broken dashboard in Superset, Tableau, Power BI, Streamlit, or any other visualization tool—only to realize that someone deleted a model your dashboard relied on?

What if I told you that you could prevent such issues by writing your transformation tables in dbt, storing your dbt logic in Git, and adding automated tests using Trino + dbt?

In this post, I’ll show you how to implement this safeguard for Superset dashboards, using a real example from our public GitHub repository: superset-dbt-trino-guard. 🚀

Linking Dashboard Metadata to dbt Models

How can we connect dashboard metadata with our dbt metadata?

Most visualization tools store their metadata in a relational database. What if we could create a dbt model that captures our dbt metadata and then compare what our dashboards expect to receive with what actually exists?

In this tutorial, we use a Postgres database to store dbt objects, Superset with Postgres as its application database, and Trino + dbt to validate the connections.

Exploring the GitHub Repository

To begin, clone the repository from GitHub:

Ensure that Docker is installed on your system.

Setup Instructions

Start the environment by running the following command: docker-compose up

Launch the relevant applications by running: source all_the_apps.sh

This script will automatically open all necessary tabs in Google Chrome for you. Here's what each tab is for:

Tab 1: DBeaver Web Interface

This is the web version of DBeaver, which we’ll use to interact with MariaDB, PostgreSQL, and Trino.

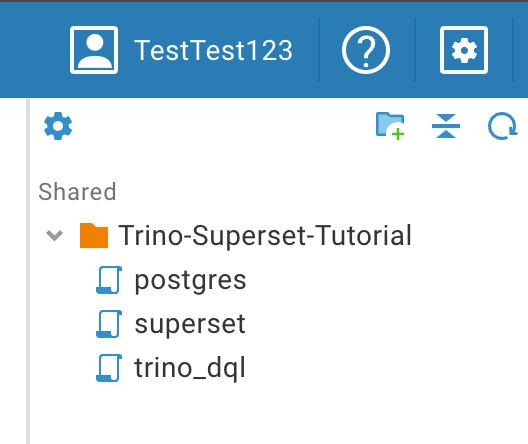

Click on Settings -> Login, and use TestTest123 as both the username and password.

Once logged in, you’ll see three connection folders on the left:

Superset: Connect using superset as both the username and password.

PostgreSQL: Connect using superset as both the username and password.

Trino: Connect using tutorial as the username and leave the password blank.

On the right-hand side, you’ll see folders containing SQL scripts that we’ll use shortly. If you don’t see them, try clicking the "Tools" menu at the top and selecting "Show Scripts".

Tab 2: Jupyter Notebook Interface

Inside the work folder, you’ll see:

A dbt folder containing all the required dbt code for both transformation and tests.

Notebooks with Python scripts that help you run the dbt projects and test metadata.

Tab 3: Superset

Access Apache Superset for data visualization and dashboarding.

Connect using superset_admin as the username and superset as the password.

Tab 4: Trino UI

In this tab, you can access the Trino UI by logging in with the username tutorial (leave the password blank).

Here, you’ll find metrics, metadata, and other information about the Trino query engine.

Setting Up the Superset Dashboard and Data Pipeline

To build a Superset Dashboard, we need to complete three key steps:

Create the source table

Run dbt transformations

Set up the Superset dashboard

Step 1: Creating the Source Table

Open the DBeaver tab, navigate to the postgres database, and execute the DDL/DML queries to create the public.customers table.

Step 2: Running dbt Transformations

Next, switch to the Jupyter Notebook tab and open the edw_dbt notebook. Run the transformation script to create the customers_202301 table.

The SQL code for this transformation is located in the customers_202301.sql file.

This script extracts all records from public.customers that were created in January 2023.

Step 3: Setting Up the Superset Dashboard

In the Superset tab, create a new connection to the PostgreSQL database.

Navigate to the Datasets tab and add a new dataset for the customers_202301 table.

Create a chart using this dataset (for simplicity, we’ll use a table chart).

Finally, create a dashboard and add the chart to it.

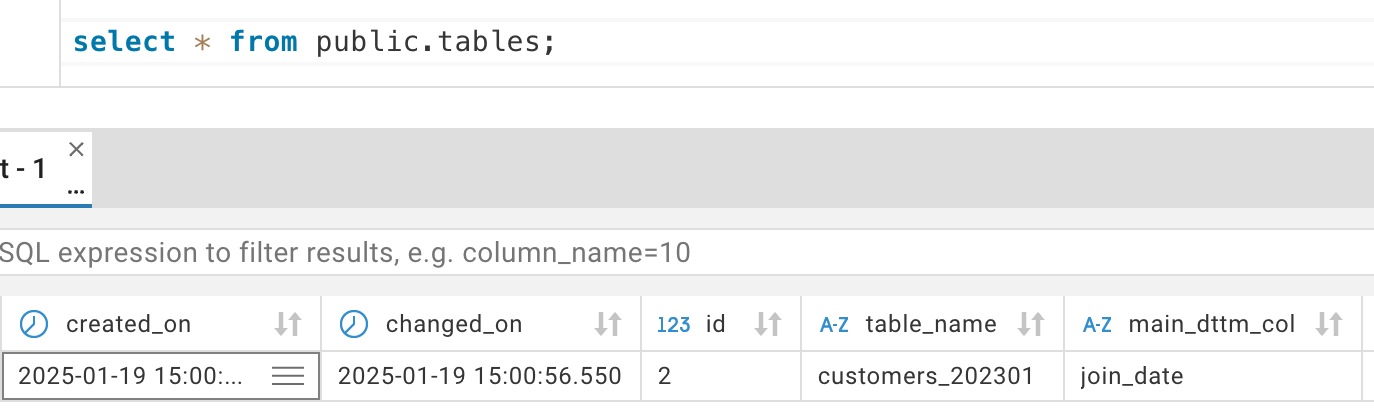

To verify the changes, return to DBeaver, open the Superset queries, and check the updates in the slices, dashboard_slices, and tables tables.

Now your Superset Dashboard is set up and ready to visualize data! 🚀

Building a dbt Metadata Model

Now, we want to create a dbt model that stores metadata about our dbt project.

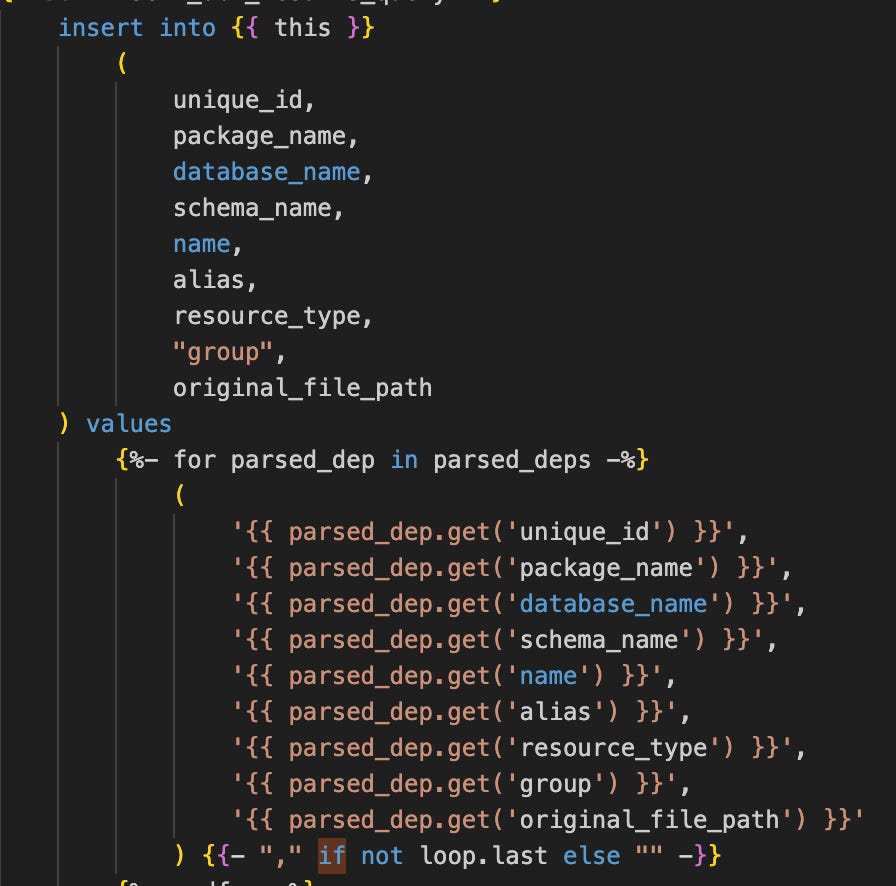

In the PostgresDbt project, next to the customers_202301 model, we will create a new model called metadata. This model will:

Define the DDL for the metadata table (without inserting any data initially).

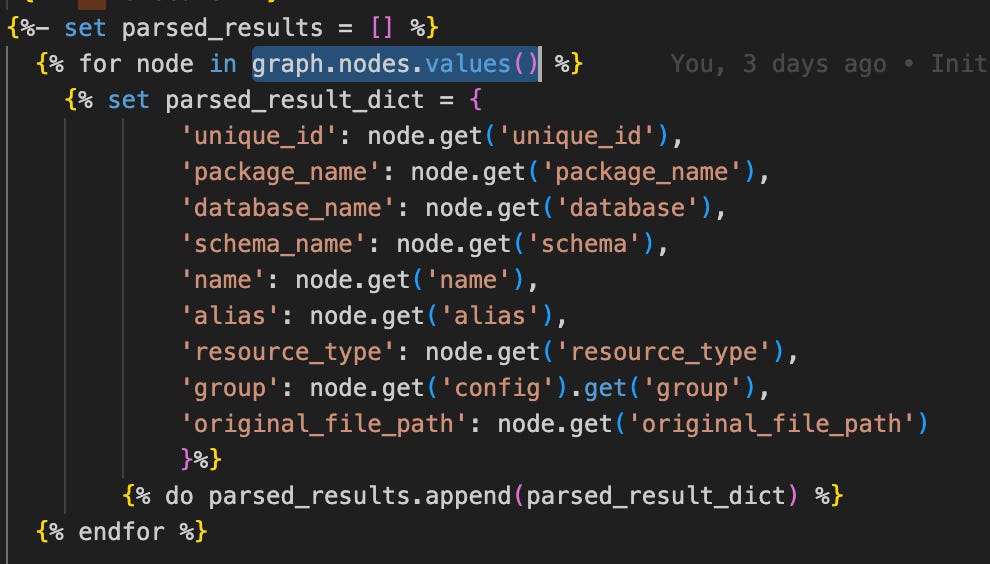

Use the insert_metadata macro as a post_hook to automatically insert metadata after the model runs.

The post-hook extracts metadata from the graph context variable, inserting details about all dbt models, including:

Schema

Model name

Alias

Group

And more...

Running the Metadata Model

Open the Jupyter Notebook tab.

In the edw_dbt notebook, run the script to build the metadata model.

Once the model is created, switch back to DBeaver and check the contents of the dbt.metadata table.

The metadata table should now contain information about both metadata and customers_202301 models, providing a structured overview of your dbt project.

Comparing Superset and dbt Metadata with Trino

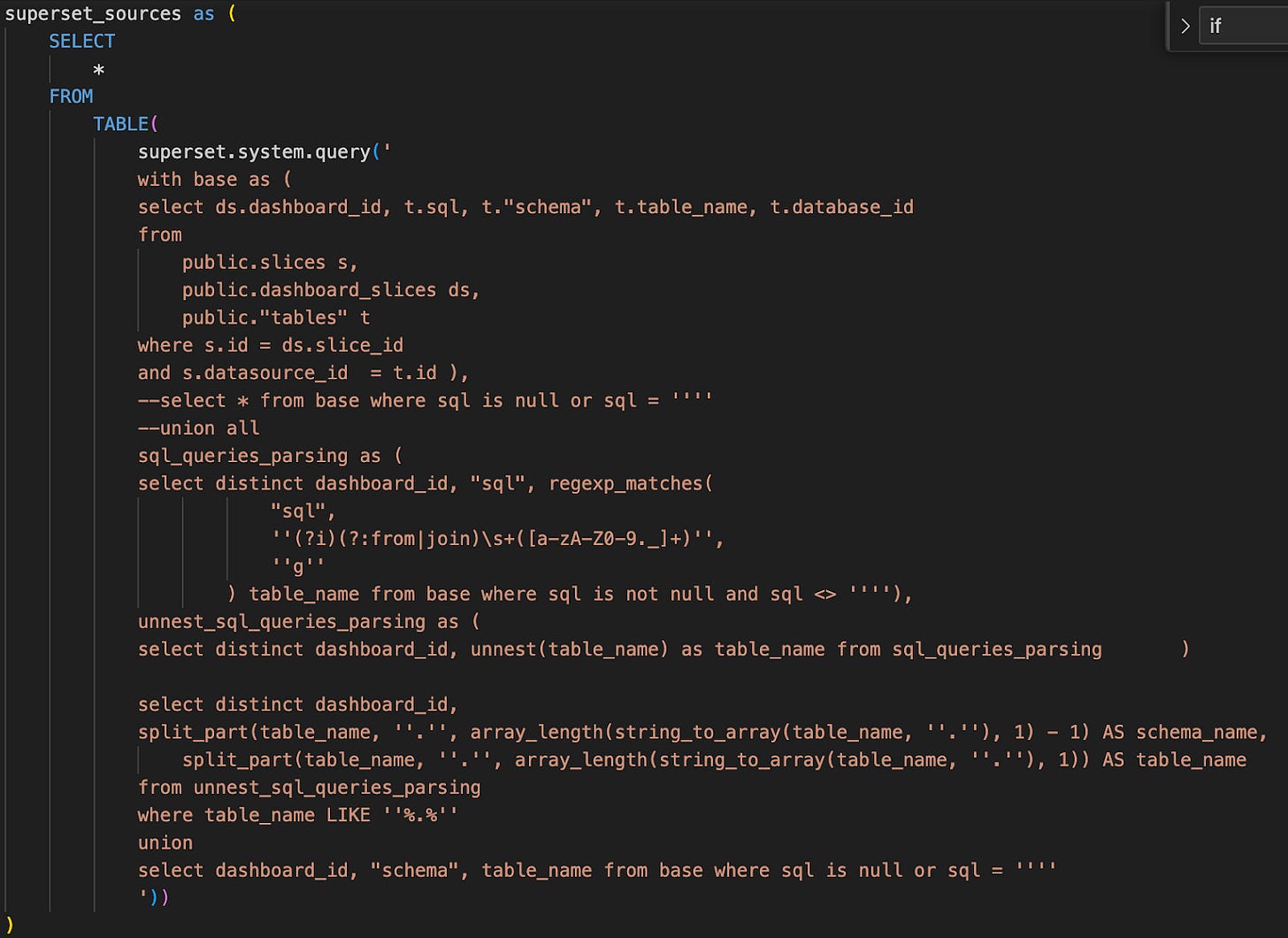

Now, let's open the TrinoDbt project and look at the test named superset_test. While it may seem complex at first, it's quite straightforward.

Step 1: Retrieving Superset Metadata

The first step is to extract metadata from Superset using the superset_sources CTE.

This CTE retrieves metadata about tables used across all Superset dashboards.

It may look complicated because it includes logic to parse datasets that are not simple tables but SQL queries instead.

Important Note: If you're using a different data visualization tool, this is the only part of the test you'll need to modify.

Step 2: Comparing Metadata

The test then extracts all objects from the dbt metadata table.

Step 3: Handling Schema Differences

Since we want to run this test before merging changes to production, we avoid using the production schema (dbt).

Instead, dbt assigns a custom schema for each PR, such as dbt_pr_236.

To ensure a proper comparison, we replace the dbt prefix with the custom schema before running the test.

If you have multiple environments, you can run your tests in a test instance of the database using the production schema, eliminating the need to replace prefixes.

It verifies that all objects retrieved from Superset (via superset_sources CTE) exist in the dbt metadata table.

Running the Test

Open Jupyter Notebook and navigate to test_superset.

Run the test, which first builds the metadata model within a custom schema.

Switch to DBeaver and verify that all objects now have custom schemas.

The script then executes the Trino test to compare metadata.

Verifying the Test

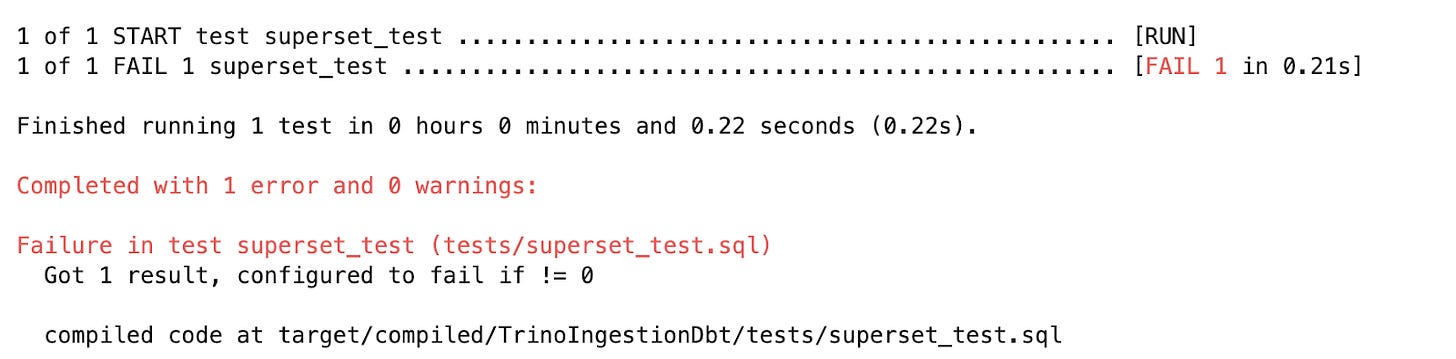

Now, try deleting the customers_202301 model and rerunning the test.

The test should fail, indicating that the model is missing.

Success! We caught an issue before deploying to production, without affecting the production schema.

Additionally, I created a GitHub workflow test to automate this validation:

📁 .github/workflows/tests.yml

Conclusion

By integrating dbt metadata validation with Trino, we can proactively prevent broken dashboards in Superset. This approach ensures that all required transformation models exist before deploying changes, reducing downtime and improving data reliability.

Although this tutorial focused on Superset, the same methodology applies to any visualization tool that stores metadata in a relational database, such as Tableau, Looker, or any other BI tool that uses a relational database as its metastore.

Furthermore, by incorporating this validation into a CI/CD pipeline with GitHub Actions, we can automate metadata checks, catching issues before they reach production. This enables teams to confidently develop and maintain their data pipelines while ensuring that dashboards remain accurate and fully functional.