Introduction

After recently launching our new library, airflow-chat—a plugin that enables seamless LLM chat interactions within your Airflow instance via our Airflow MCP—we quickly turned our attention to an important question: how do we effectively observe and log these conversations?

Imagine a scenario where a user triggers an unintended action due to safe mode being disabled, leading to a production error. How do you trace the issue? Who initiated the command? What was the exact prompt that caused the failure? Or more generally—how do you evaluate LLM interactions over time?

To address these questions, we turned to Langfuse, an elegant open-source tool purpose-built for developing, monitoring, evaluating, and debugging LLM applications. In this blog post, we’ll walk you through integrating Langfuse into your LangChain workflow—and specifically, how we’ve already done this within the airflow-chat plugin. You can follow along using our repo: https://github.com/hipposys-ltd/airflow-mcp-plugins.

Quickstart

Let’s walk through a quick setup to see how Langfuse integrates with the airflow-chat plugin.

1. Clone the Repository and Set Up Locally

Start by cloning our GitHub repository:

    git clone https://github.com/hipposys-ltd/airflow-mcp-plugins

Then follow the setup steps outlined in our airflow-chat blog post to get the plugin running in your local environment.

Note: Running both Airflow and Langfuse locally can be resource-intensive. If your machine starts lagging, consider connecting to pre-deployed instances of Airflow and Langfuse for a smoother experience.

To launch both locally, run:

    just airflow_langfuse

Once the containers are up, head to http://localhost:3000/ and sign up for a new Langfuse account.

2. Create an Organization, Project, and API Key

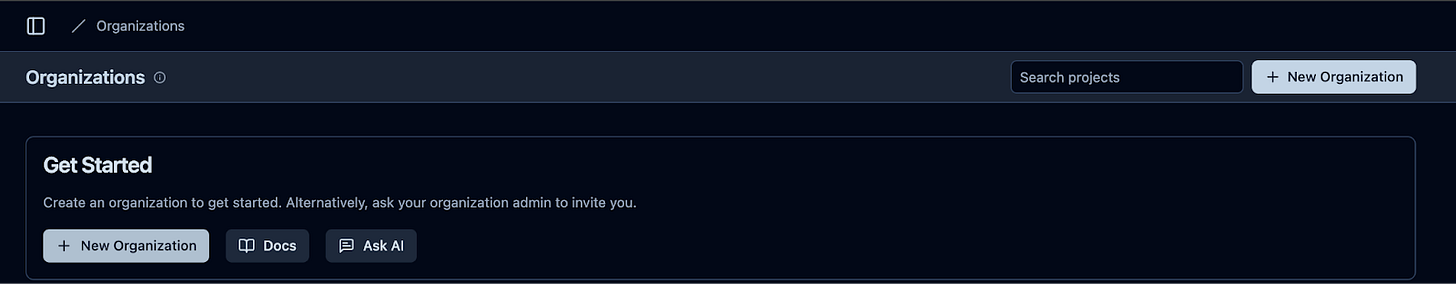

After signing up:

Create a new Organization

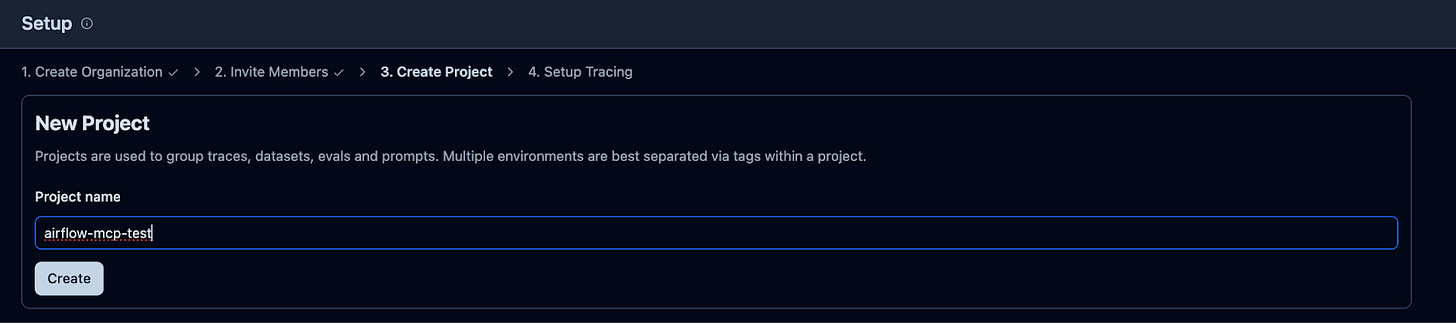

Create a Project under that organization

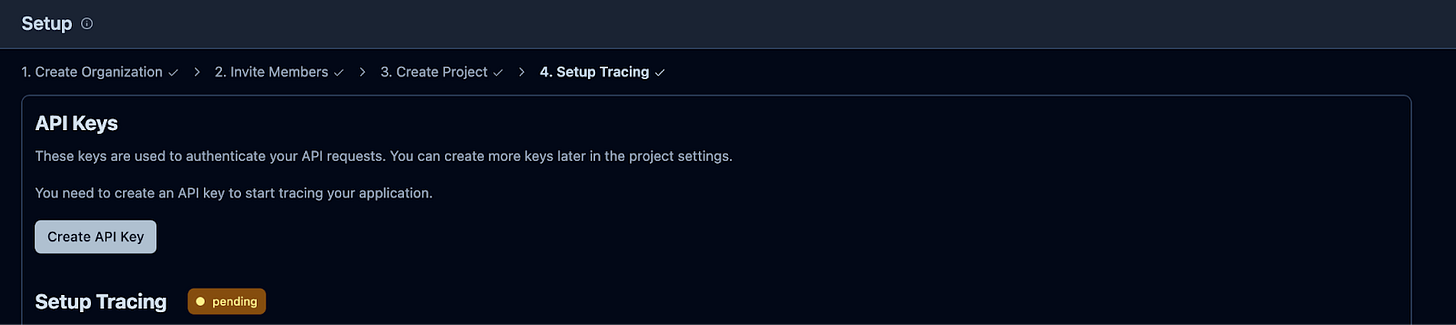

Generate an API key

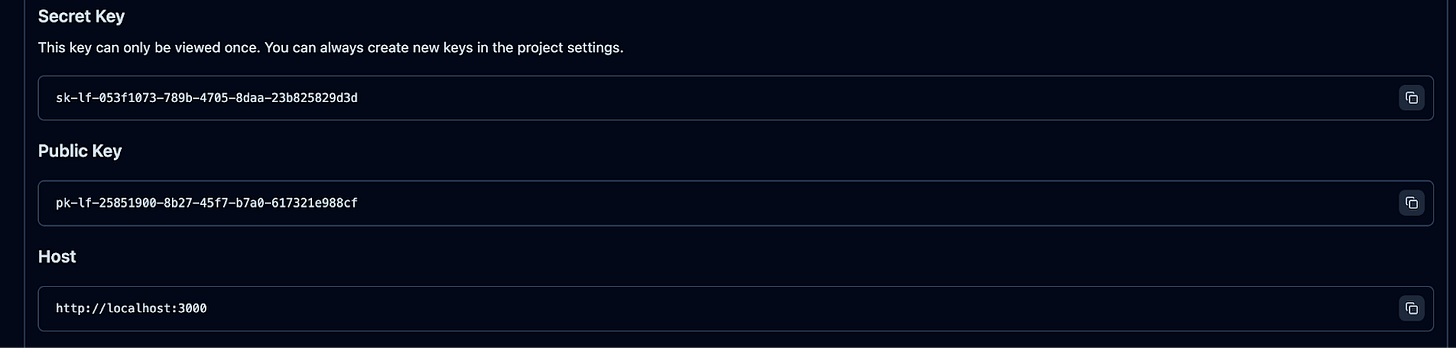

Copy the credentials and add them to your Airflow environment variables. If you’re using our GitHub setup, you can simply paste them into the airflow-plugin.env file:

    LANGFUSE_SECRET_KEY=sk-lf-053f1073-789b-4705-8daa-23b825829d3d
    LANGFUSE_PUBLIC_KEY=pk-lf-25851900-8b27-45f7-b7a0-617321e988cf
    LANGFUSE_HOST=http://host.docker.internal:3000

3. Restart and Explore
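Before restarting, it can help to confirm that all three variables are visible to the process. The following is a minimal sketch and not part of the plugin: the helper name `check_langfuse_env` is hypothetical, and the `sk-lf-`/`pk-lf-` prefix check is an assumption based on the key format shown above.

```python
import os
from typing import List, Mapping, Optional

REQUIRED_VARS = ("LANGFUSE_SECRET_KEY", "LANGFUSE_PUBLIC_KEY", "LANGFUSE_HOST")


def check_langfuse_env(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of human-readable problems; an empty list means all good."""
    env = os.environ if env is None else env
    problems = [f"{name} is not set" for name in REQUIRED_VARS if not env.get(name)]

    # Assumption: keys follow the prefixes seen in the example above.
    secret = env.get("LANGFUSE_SECRET_KEY", "")
    public = env.get("LANGFUSE_PUBLIC_KEY", "")
    if secret and not secret.startswith("sk-lf-"):
        problems.append("LANGFUSE_SECRET_KEY does not start with 'sk-lf-'")
    if public and not public.startswith("pk-lf-"):
        problems.append("LANGFUSE_PUBLIC_KEY does not start with 'pk-lf-'")
    return problems


if __name__ == "__main__":
    # Prints nothing when the environment looks complete.
    for problem in check_langfuse_env():
        print(problem)
```

Running this inside the Airflow container is a quick way to catch a swapped or missing key before you start looking for traces that never arrive.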

Restart your environment:

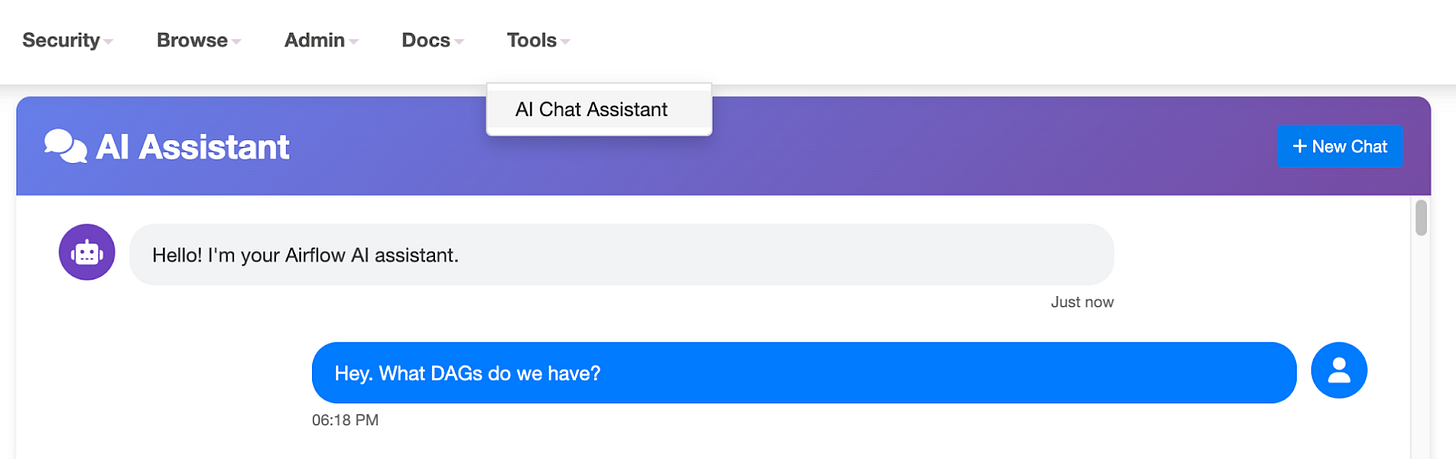

    just airflow_langfuse

Now, navigate to the Airflow UI, interact with the airflow-chat plugin, and ask a few test questions.

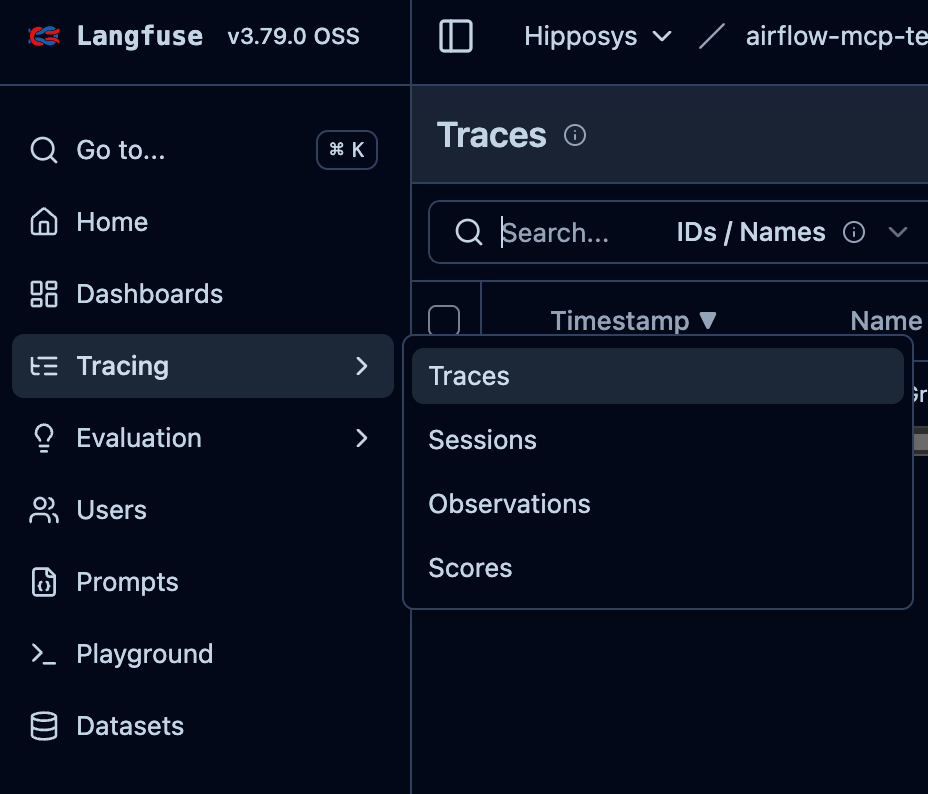

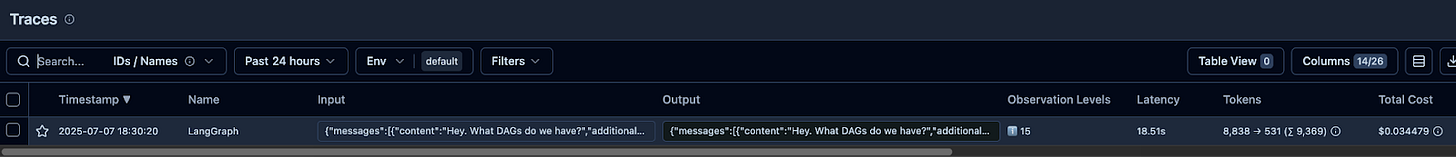

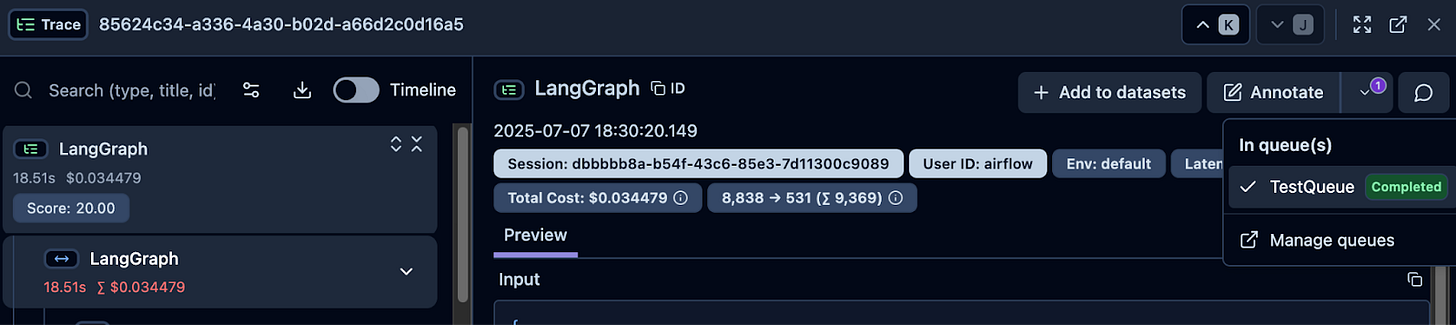

Then head over to the Langfuse dashboard. Open the "Tracing → Traces" tab:

Voilà—your entire chat session is logged, complete with trace-level details.

Just like that, observability is in place!

Going Further with Langfuse

Langfuse offers much more than just trace logging—it provides powerful tools to evaluate and improve your LLM application.

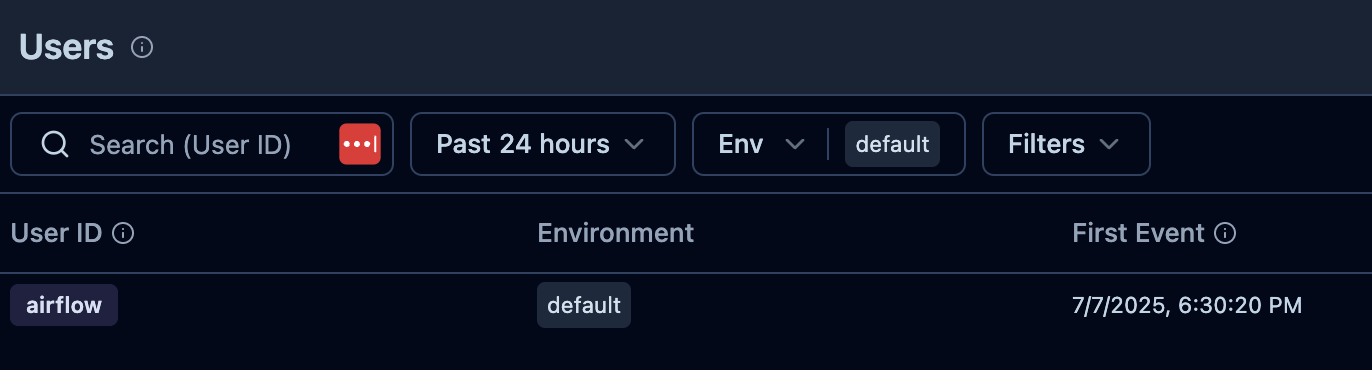

Users

Navigate to the Users tab to see a list of application users. You’ll notice a user named "airflow"—this represents interactions made through the airflow-chat plugin. Click on it to view all associated sessions. This is a great way to monitor how your team is using the plugin over time.

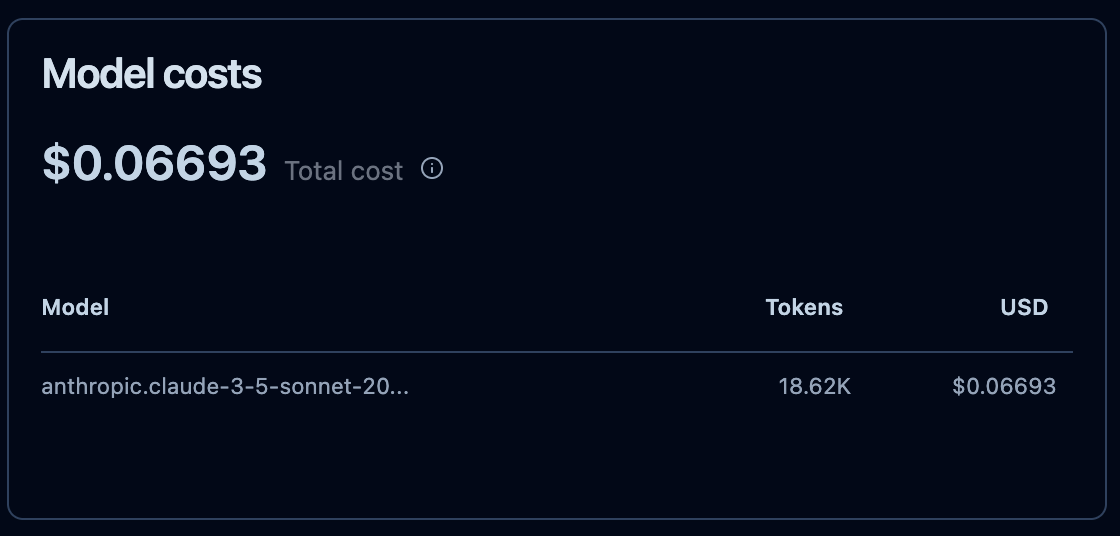

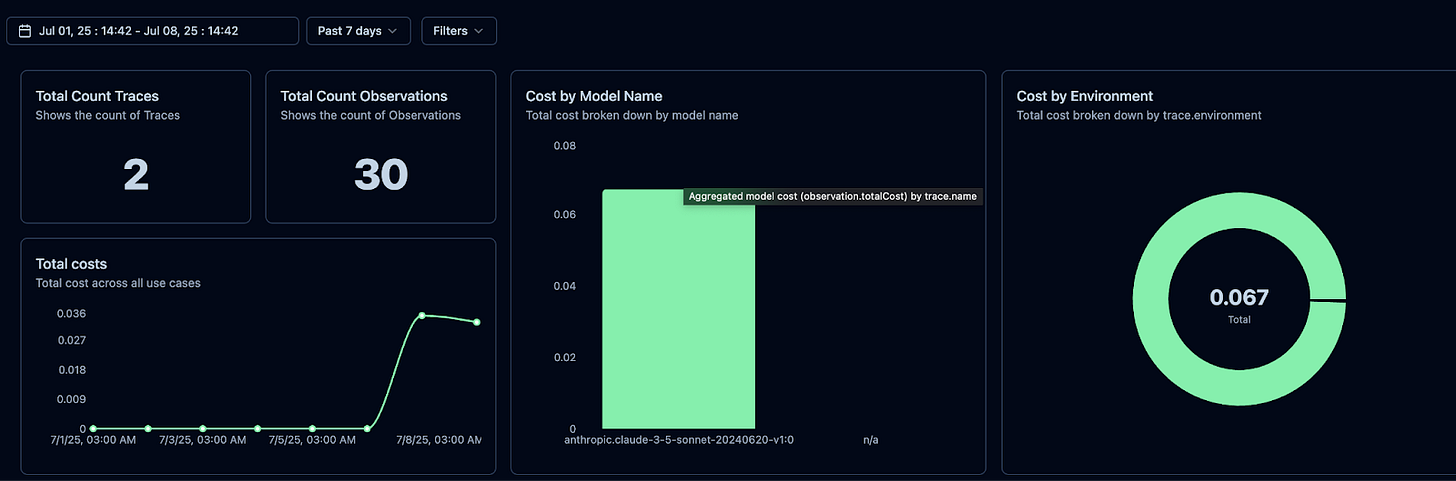

Cost Tracking

Langfuse makes it easy to track costs. In the Traces tab, you’ll see session-level costs, while the Home tab gives you an overview of model-level expenses. For deeper insights, head over to the Dashboards tab and explore the built-in Langfuse Cost Dashboard, or create a custom dashboard with your own cost graphs.

If you suspect that token prices are incorrect, go to Settings → Models, and either “Add model definition” or “Clone” an existing one to customize pricing.
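As a rough way to sanity-check a number in the dashboard, cost is token counts multiplied by the model's prices. The sketch below only illustrates that arithmetic: the `estimate_cost` helper is hypothetical, the prices are made up, and expressing them per one million tokens is an assumption for readability rather than how Langfuse stores them.

```python
from decimal import Decimal


def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_1m: Decimal,
    output_price_per_1m: Decimal,
) -> Decimal:
    """Cost in USD given token counts and USD prices per one million tokens."""
    million = Decimal(1_000_000)
    total = (
        Decimal(input_tokens) * input_price_per_1m
        + Decimal(output_tokens) * output_price_per_1m
    )
    return total / million


# Hypothetical prices: $3.00 per 1M input tokens, $15.00 per 1M output tokens.
cost = estimate_cost(1_200, 350, Decimal("3.00"), Decimal("15.00"))
print(cost)
```

`Decimal` is used instead of floats so that small per-token prices do not accumulate rounding noise when summed over many traces.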

Evaluations

Langfuse supports two types of evaluations: Human Annotation and LLM-as-a-Judge.

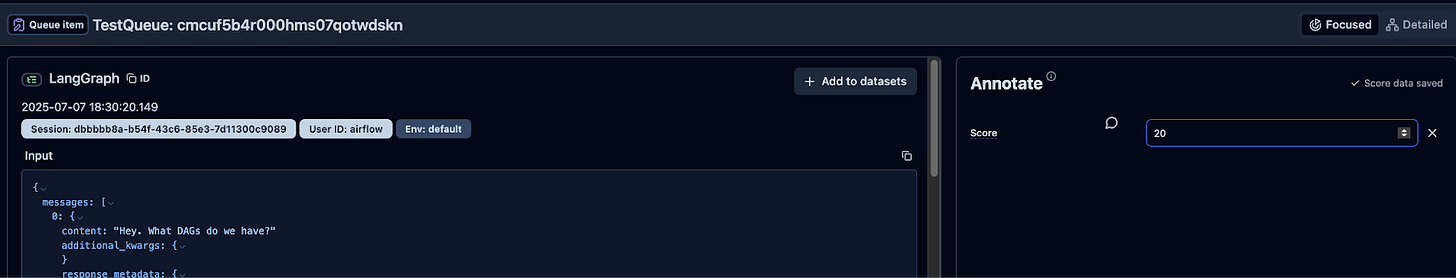

Human Annotation

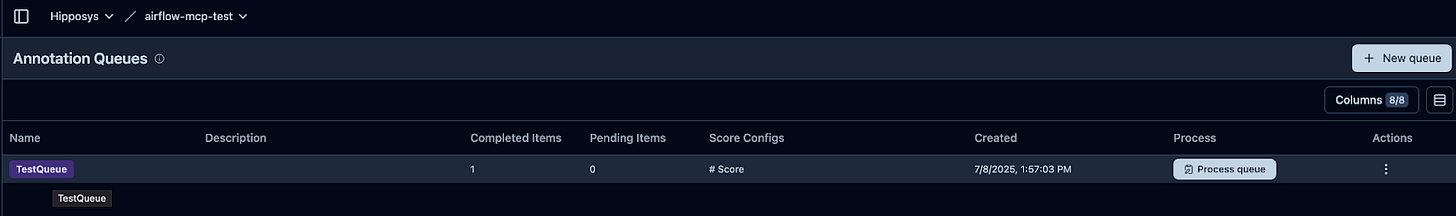

Go to the Scores tab and create a new score. Next, create an Annotation Queue, and then add traces to the queue via the Traces tab. You can now manually evaluate each session. While this method offers fine-grained control, it can be time-consuming.

LLM-as-a-Judge

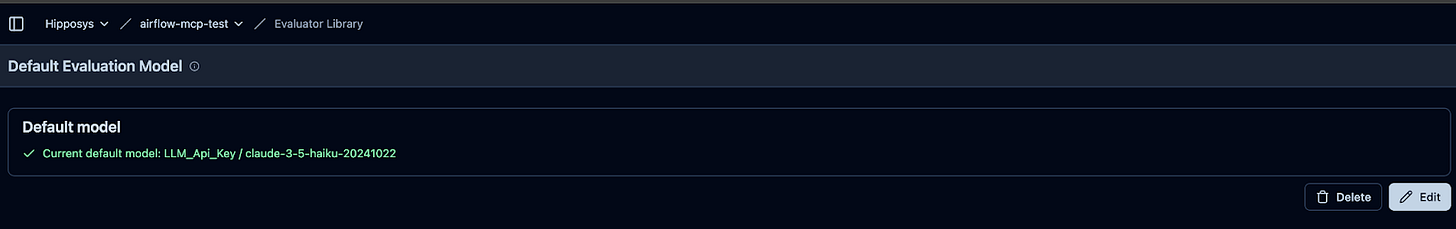

To automate evaluations, go to Settings → LLM Connection and add a model connection.

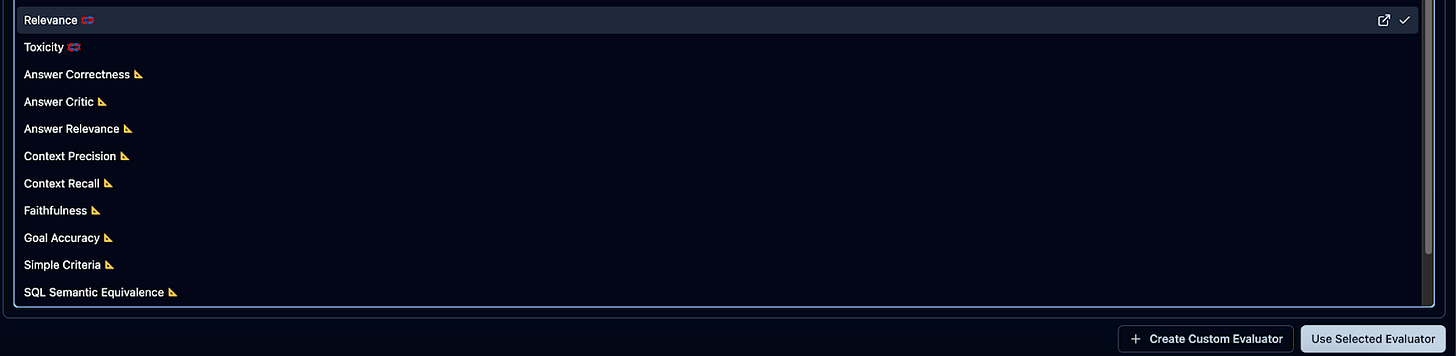

Then create a custom evaluator by navigating to Create Custom Evaluator → Create New Evaluator, and set up a Default Evaluation Model. Choose a metric, such as Relevance, and specify whether you want to evaluate existing traces or only new ones.

For inputs and outputs, map query to input and generation to output.
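To make the mapping concrete: the evaluator prompt contains placeholders, and each placeholder is filled from a field of the trace. The sketch below is purely illustrative. The template text, the `{{variable}}` handling, and the `render_eval_prompt` helper are assumptions made for this example, not Langfuse's internal code.

```python
import re
from typing import Mapping

# Hypothetical evaluator template with two placeholders.
TEMPLATE = (
    "Rate how relevant the answer is to the question.\n"
    "Question: {{query}}\n"
    "Answer: {{generation}}"
)

# Mapping chosen in the UI: template variable -> trace field.
VARIABLE_MAPPING = {"query": "input", "generation": "output"}


def render_eval_prompt(
    template: str, mapping: Mapping[str, str], trace: Mapping[str, str]
) -> str:
    """Replace each {{variable}} with the trace field it is mapped to."""

    def substitute(match):
        variable = match.group(1)
        return str(trace[mapping[variable]])

    return re.sub(r"\{\{(\w+)\}\}", substitute, template)


trace = {
    "input": "Which DAGs exist?",
    "output": "There are two DAGs: etl and report.",
}
prompt = render_eval_prompt(TEMPLATE, VARIABLE_MAPPING, trace)
print(prompt)
```

If the mapping is wrong (for example, both variables pointing at the input), the judge model ends up scoring the question against itself, which is a common cause of suspiciously uniform scores.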

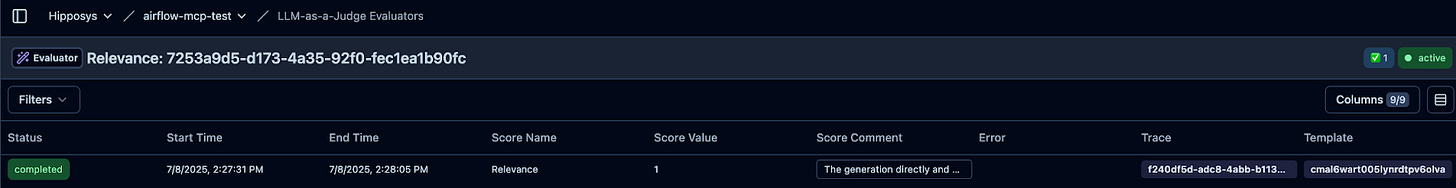

Now, go back to Airflow and ask a question. Check the Evaluation Logs and you’ll see a score and comment like:

“The generation directly and comprehensively answers the user's query by listing all the DAGs, providing their names, and offering additional context about their purpose and scheduling. The response is focused, informative, and adds significant value to the user's understanding of the available DAGs in the Airflow environment.”

This demonstrates how Langfuse helps you evaluate, measure, and continuously improve your LLM-powered apps.

Setting Up Langfuse for Your LangChain Application

Integrating Langfuse into your LangChain app is straightforward. The configuration is handled in the airflow_chat/plugins/app/server/llm.py file, specifically within the get_user_chat_config function:

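    import os
    from typing import Optional

    # Assumed import path (Langfuse Python SDK v2); newer SDK versions may differ.
    from langfuse.callback import CallbackHandler


    def get_user_chat_config(session_id: str, username: Optional[str] = None) -> dict:
        # thread_id keys the conversation state in the LangGraph checkpointer.
        chat_config = {
            'configurable': {'thread_id': session_id},
            'recursion_limit': 100
        }
        # Attach Langfuse tracing only when a host is configured.
        if os.environ.get('LANGFUSE_HOST'):
            langfuse_handler = CallbackHandler(
                # Fall back to the session ID when no username is available.
                user_id=username if username else session_id,
                session_id=str(session_id),
                public_key=os.environ.get('LANGFUSE_PUBLIC_KEY'),
                secret_key=os.environ.get('LANGFUSE_SECRET_KEY'),
                host=os.environ.get('LANGFUSE_HOST')
            )
            chat_config['callbacks'] = [langfuse_handler]
        return chat_config

This function builds the chat configuration and attaches the Langfuse callback handler only when LANGFUSE_HOST is set. The public and secret keys are read from the environment at the same point, so all three variables should be defined together.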
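To see the shape of the returned dictionary without a running Langfuse instance, here is a hedged variant of the same idea. It is not the plugin's code: `build_chat_config`, the `env` parameter, and the `handler_factory` parameter are hypothetical names introduced so the logic can be exercised with a stand-in handler. Unlike the original, this variant attaches the handler only when all three variables are set.

```python
from typing import Any, Callable, Dict, Mapping, Optional


def build_chat_config(
    session_id: str,
    username: Optional[str] = None,
    env: Optional[Mapping[str, str]] = None,
    handler_factory: Optional[Callable[..., Any]] = None,
) -> Dict[str, Any]:
    """Build a LangGraph-style config; attach a tracing callback if configured."""
    env = env or {}
    config: Dict[str, Any] = {
        "configurable": {"thread_id": session_id},
        "recursion_limit": 100,
    }
    required = ("LANGFUSE_HOST", "LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY")
    if handler_factory is not None and all(env.get(name) for name in required):
        config["callbacks"] = [
            handler_factory(
                user_id=username or session_id,  # fall back to the session id
                session_id=str(session_id),
                public_key=env["LANGFUSE_PUBLIC_KEY"],
                secret_key=env["LANGFUSE_SECRET_KEY"],
                host=env["LANGFUSE_HOST"],
            )
        ]
    return config


# Stand-in values; `dict` as the factory simply records the keyword arguments.
fake_env = {
    "LANGFUSE_HOST": "http://localhost:3000",
    "LANGFUSE_PUBLIC_KEY": "pk-lf-example",
    "LANGFUSE_SECRET_KEY": "sk-lf-example",
}
traced = build_chat_config("s1", env=fake_env, handler_factory=dict)
untraced = build_chat_config("s1", username="alice")
```

In real use you would pass `os.environ` and Langfuse's `CallbackHandler` in place of the stand-ins. Injecting both makes the function easy to unit test, at the cost of a slightly longer signature.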

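The resulting configuration is then passed as the config argument to the astream_events method (at the call site the variable is named chat_session):

    from langchain_core.messages import HumanMessage

    async for event in self._agent.astream_events(
        {"messages": [HumanMessage(content=message)]},
        config=chat_session,  # the dict returned by get_user_chat_config
        version='v2',
    ):
        ...  # handle each streamed event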
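The loop body is not shown above. As a minimal sketch of what consuming the stream can look like, the example below keeps only `on_chat_model_stream` events and joins their text chunks. The event source is simulated with a stand-in async generator (`fake_astream_events`, a hypothetical name) so the snippet runs without LangChain installed. The event dictionary shape follows the `astream_events` v2 schema, but the field access is an assumption you should verify against your LangChain version.

```python
import asyncio
from types import SimpleNamespace
from typing import Any, AsyncIterator, Dict, List


async def fake_astream_events() -> AsyncIterator[Dict[str, Any]]:
    """Stand-in for agent.astream_events(...); yields v2-style event dicts."""
    yield {"event": "on_chat_model_start", "name": "llm", "data": {}}
    for text in ("You have ", "2 DAGs."):
        # In LangChain the chunk is a message chunk object; only .content is used.
        yield {
            "event": "on_chat_model_stream",
            "name": "llm",
            "data": {"chunk": SimpleNamespace(content=text)},
        }
    yield {"event": "on_chat_model_end", "name": "llm", "data": {}}


async def collect_answer(events: AsyncIterator[Dict[str, Any]]) -> str:
    """Concatenate streamed token text, ignoring every other event type."""
    parts: List[str] = []
    async for event in events:
        if event["event"] == "on_chat_model_stream":
            parts.append(event["data"]["chunk"].content)
    return "".join(parts)


answer = asyncio.run(collect_answer(fake_astream_events()))
print(answer)
```

Because the Langfuse handler is attached through the config's callbacks, tracing happens alongside this loop; the consumer does not need to know that Langfuse is involved.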

The _agent object itself is initialized using the create_react_agent utility from LangGraph:

    from langgraph.prebuilt import create_react_agent

    self._agent = create_react_agent(
        self._llm,
        tools,
        checkpointer=checkpointer,
        prompt=PROMPT_MESSAGE
    )

All of this logic can be found in the airflow_chat/plugins/app/server/llm.py file. This setup enables seamless event streaming and tracking via Langfuse within your LangChain-based application.

Summary

By combining the airflow-chat plugin with Langfuse, we’ve created a robust observability stack for LLM-powered workflows within Airflow. From real-time trace logging and cost tracking to advanced evaluation methods like LLM-as-a-judge, Langfuse brings clarity and control to your AI interactions. Whether you're debugging unexpected prompts, monitoring usage, or analyzing performance, Langfuse helps you iterate with confidence. For a deeper dive into all the features Langfuse offers, visit https://langfuse.com.