Airflow Schedule Insights: How Dr. Movie Tackled DAG Scheduling Challenges

How Airflow Schedule Insights Helped Solve Scheduling Failures, Over-parallelization, and Workflow Delays for Dr. Movie’s Data Engineering Team

Introducing the Airflow Schedule Insights Plugin

Apache Airflow users often face challenges when managing complex scheduling workflows. Today, we’re excited to introduce Airflow Schedule Insights—a new open-source plugin that helps data engineers optimize Airflow schedules, gain visibility into their DAG runs, and prevent scheduling issues before they occur.

Dr. Movie, a fictional cinema chain, relies heavily on Apache Airflow to orchestrate its data workflows. However, its data engineering team faced significant scheduling issues that disrupted operations:

Some DAGs failed to run.

Some DAGs didn’t even start running.

Others ran too many tasks in parallel, causing resource bottlenecks.

Frequent delays in certain workflows left clients and project managers frustrated.

Dr. Movie’s data platform includes load DAGs like load_box_office_data, load_ticket_sales, load_streaming_data, and load_customer_feedback, as well as transformation DAGs like transform_box_office_insights, generate_customer_segments, calculate_streaming_metrics, and others.

With mounting pressure from stakeholders, the team needed a way to:

Identify ahead of time which DAGs would not run. These DAGs never start, so they never fail and never raise an alert.

Identify and address the reasons behind these issues.

Simulate schedule changes to reduce parallel runs and optimize resource usage.

Analyze past DAG failures and their frequency.

View upcoming run times for specific DAGs to set clear expectations with stakeholders.

The team at Dr. Movie used Airflow Schedule Insights to address these challenges and optimize their workflows. The plugin was compatible with their Postgres metadata database, allowing them to gain better visibility and control over their Airflow scheduling. However, if Dr. Movie had used a different metadata database, they could have submitted a feature request via GitHub Issues to extend support for their specific setup.

To install or to see the code for Airflow Schedule Insights, visit the GitHub repository or check out the PyPI page.

Let’s see how the data engineers at Dr. Movie leveraged Airflow Schedule Insights to overcome these challenges and streamline their workflows!

Installing the Plugin

To resolve their scheduling issues, Dr. Movie's engineers added airflow-schedule-insights to their Airflow requirements file and restarted the Airflow webserver. Once installed, the plugin was ready to enhance their workflow monitoring and scheduling capabilities.
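One way to confirm that the package actually landed in the webserver's environment is to look it up by distribution name. The following is a minimal sketch using only the Python standard library; the helper name `installed_version` is ours for illustration, and the distribution name is the one mentioned above.

```python
from importlib import metadata
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Run this with the same Python interpreter that runs the Airflow webserver.
    version = installed_version("airflow-schedule-insights")
    print(version or "airflow-schedule-insights is not installed")
```

If the script reports that the package is missing, the requirements file was probably installed into a different environment than the one the webserver uses.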

Getting Started with the Plugin

To begin using the Airflow Schedule Insights plugin, the data engineers at Dr. Movie navigated to their Airflow menu and found the Schedule Insights option under the Browse section.

Timezone

Upon opening the plugin, they noticed their local timezone, Jerusalem, displayed at the top of the page. This was especially helpful, as translating all times from UTC had been a constant hassle for them.
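For comparison, this is roughly the conversion the team had been doing by hand. It is a minimal sketch with the standard library's `zoneinfo` module; it assumes the IANA zone name `Asia/Jerusalem` and an example timestamp, and it says nothing about how the plugin itself handles timezones.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

# Assumed IANA name for the team's local timezone.
JERUSALEM = ZoneInfo("Asia/Jerusalem")


def to_local(utc_dt: datetime, tz: ZoneInfo = JERUSALEM) -> datetime:
    """Convert a timezone-aware datetime to the given local timezone."""
    if utc_dt.tzinfo is None:
        raise ValueError("expected a timezone-aware datetime")
    return utc_dt.astimezone(tz)


if __name__ == "__main__":
    # Example value only; Airflow stores run times in UTC.
    run_start_utc = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)
    print(to_local(run_start_utc).isoformat())
```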

Gantt Chart

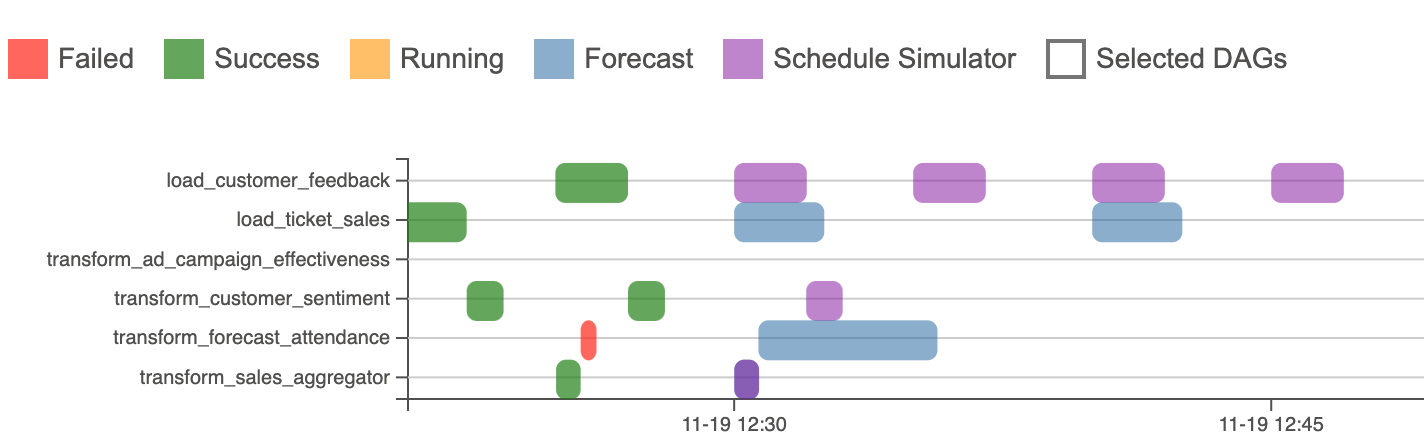

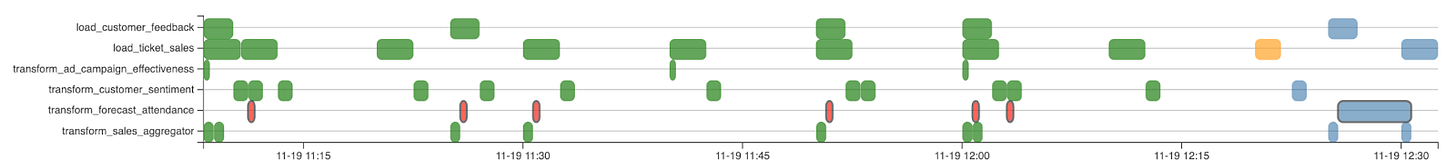

The engineers first wanted to review past runs for a specific time range, so they adjusted the time filter at the top. They then selected the transform_forecast_attendance DAG, which had failed the previous night, to see how often it failed and which other DAGs had run at the same time and might have competed with it for resources.

After reviewing the data, they realized that the transform_forecast_attendance DAG failed consistently, regardless of other DAG runs. This indicated that the issue lay within the DAG's logic itself, rather than resource contention or parallel execution problems.
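The reasoning behind that conclusion can be sketched in a few lines: compute how often the DAG failed, then check whether each failed run overlapped with runs of other DAGs. The `Run` record and the sample data below are made up for illustration and are not the plugin's data model.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Set


@dataclass(frozen=True)
class Run:
    dag_id: str
    start: datetime
    end: datetime
    state: str  # "success" or "failed"


def failure_rate(runs: List[Run], dag_id: str) -> float:
    """Fraction of a DAG's runs that ended in the 'failed' state."""
    own = [r for r in runs if r.dag_id == dag_id]
    if not own:
        return 0.0
    return sum(r.state == "failed" for r in own) / len(own)


def overlapping_dags(runs: List[Run], target: Run) -> Set[str]:
    """IDs of other DAGs with a run whose interval intersects the target run."""
    return {
        r.dag_id
        for r in runs
        if r.dag_id != target.dag_id and r.start < target.end and target.start < r.end
    }


# Made-up sample data for illustration only.
RUNS = [
    Run("transform_forecast_attendance", datetime(2024, 1, 14, 2, 0), datetime(2024, 1, 14, 2, 10), "failed"),
    Run("transform_forecast_attendance", datetime(2024, 1, 15, 2, 0), datetime(2024, 1, 15, 2, 10), "failed"),
    Run("load_ticket_sales", datetime(2024, 1, 15, 1, 55), datetime(2024, 1, 15, 2, 5), "success"),
]

if __name__ == "__main__":
    print(failure_rate(RUNS, "transform_forecast_attendance"))
    for run in RUNS[:2]:
        print(run.start, overlapping_dags(RUNS, run))
```

In this sample the DAG fails both when another DAG runs alongside it and when nothing else is running, which is the pattern that points to the DAG's own logic rather than to contention.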

Additionally, by changing the end time in the time filter, they could also view future runs in the Gantt chart.

For event-based DAGs (triggered by datasets or external triggers), only the next predicted run is shown, as forecasting beyond that could lead to inaccuracies.

Next Run Table

After addressing the issue with the transform_forecast_attendance DAG, the data engineers at Dr. Movie shifted their focus to understanding why several other DAGs, including load_box_office_reports, load_movie_inventory, transform_inventory_optimization, and transform_loyalty_program_analysis, had not run and whether they would run in the future. To investigate, they navigated to the Next Run table in the plugin.

They quickly identified the root causes:

The load_box_office_reports DAG was paused, so it wouldn’t run until they unpaused it.

The load_movie_inventory DAG was not paused but had no schedule or dependencies, meaning it would only run if triggered externally.

The transform_inventory_optimization DAG was paused, which prevented the transform_loyalty_program_analysis and transform_movie_performance DAGs from running, because of unmet dependencies.

Once the engineers unpaused the transform_inventory_optimization DAG, it ran as expected, and so did its two dependent DAGs, transform_loyalty_program_analysis and transform_movie_performance.

The specific reason a DAG will not run is listed in the Description column of the Next Run table.
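The three cases above (paused, no schedule or dependencies, blocked by a paused upstream DAG) follow a simple decision order. The sketch below illustrates that logic with a hypothetical `DagInfo` structure and made-up cron values; it is not the plugin's implementation, and the reason strings are ours.

```python
from typing import Dict, List, Optional, TypedDict


class DagInfo(TypedDict):
    paused: bool
    schedule: Optional[str]  # cron expression, or None when there is no time schedule
    upstream: List[str]      # IDs of the DAGs this DAG waits on


def why_not_running(dag_id: str, dags: Dict[str, DagInfo]) -> Optional[str]:
    """Return a reason the DAG has no upcoming run, or None if it should run.

    Assumes the dependency graph has no cycles.
    """
    dag = dags[dag_id]
    if dag["paused"]:
        return "DAG is paused"
    if dag["schedule"] is not None:
        return None  # a time-based schedule fires on its own
    if not dag["upstream"]:
        return "no schedule or dependencies; runs only when triggered externally"
    for parent in dag["upstream"]:
        parent_reason = why_not_running(parent, dags)
        if parent_reason is not None:
            return f"upstream DAG {parent} will not run ({parent_reason})"
    return None


# Hypothetical metadata mirroring the cases described above.
DAGS: Dict[str, DagInfo] = {
    "load_box_office_reports": {"paused": True, "schedule": "0 * * * *", "upstream": []},
    "load_movie_inventory": {"paused": False, "schedule": None, "upstream": []},
    "transform_inventory_optimization": {"paused": True, "schedule": "0 3 * * *", "upstream": []},
    "transform_loyalty_program_analysis": {
        "paused": False,
        "schedule": None,
        "upstream": ["transform_inventory_optimization"],
    },
}

if __name__ == "__main__":
    for dag_id in DAGS:
        print(dag_id, "->", why_not_running(dag_id, DAGS))
```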

After resolving the issues with the paused DAGs, the engineers at Dr. Movie wanted to check the next scheduled runs for the load_customer_feedback, transform_customer_sentiment, and transform_sales_aggregator DAGs, as well as what would trigger them.

They turned to the Next Run table for this information. The load_customer_feedback DAG was scheduled to run every 25 minutes.

The transform_customer_sentiment DAG would be triggered by a Dataset update.

The transform_sales_aggregator DAG, on the other hand, would be triggered by a Trigger operator.

The specific trigger for each DAG was listed in the Description column of the Next Run table, making clear when and how it would run.

All Future Runs

Next, the engineers wanted to know which run would come next within their chosen time range, regardless of DAG. They checked the All Future Runs table, which lists every future run ordered by start time. As in the Gantt chart, event-based DAGs (triggered by datasets or external triggers) show only their next predicted run, since forecasting beyond that could be inaccurate. The Trigger column gave the reason for each run. The next DAG to run turned out to be transform_customer_sentiment, triggered by a dataset.

Using the Schedule Impact Simulator

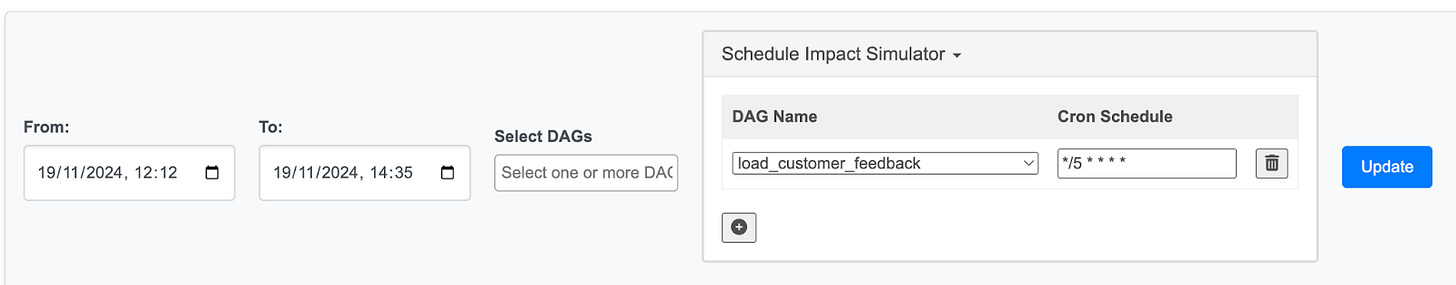

The Schedule Impact Simulator is a powerful tool within the Airflow Schedule Insights plugin that allows you to experiment with DAG schedules without altering them in your actual Airflow instance. This feature helps visualize how schedule changes might affect future runs of the selected DAG and its dependent DAGs.

To use the Schedule Impact Simulator, the engineers first added the DAG with a new cron expression to the corresponding table in the filter form at the top of the page.

They wanted to check what would happen if they changed the schedule of load_customer_feedback to run every 5 minutes instead of every 25. By using the simulator, they could see the new schedule for that DAG on the Gantt chart and how it impacted the schedules of other DAGs (highlighted in purple). Both transform_customer_sentiment and transform_sales_aggregator had their schedules adjusted as well.

In both the Gantt chart and the tables, these runs were marked as "simulator," indicating that these changes were simulations and not actual modifications to the live DAG schedules.
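To get a feel for what such a change means in run counts, the sketch below lists the tick times of a minute-step cron schedule over a one-hour window. It assumes the two schedules are written as `*/25 * * * *` and `*/5 * * * *`, and it handles only that `*/N` minute form; it is not how the simulator works internally, and a real implementation would use a full cron library such as croniter.

```python
from datetime import datetime, timedelta
from typing import List


def minute_step_runs(step: int, start: datetime, end: datetime) -> List[datetime]:
    """Tick times of the cron schedule '*/step * * * *' within [start, end)."""
    if not 1 <= step <= 59:
        raise ValueError("step must be between 1 and 59")
    # Round start up to the next whole minute.
    current = start.replace(second=0, microsecond=0)
    if current < start:
        current += timedelta(minutes=1)
    runs = []
    while current < end:
        # '*/step' in the minute field matches minutes 0, step, 2*step, ... of every hour.
        if current.minute % step == 0:
            runs.append(current)
        current += timedelta(minutes=1)
    return runs


if __name__ == "__main__":
    window_start = datetime(2024, 1, 15, 10, 0)  # example window
    window_end = datetime(2024, 1, 15, 11, 0)
    for step in (25, 5):
        ticks = minute_step_runs(step, window_start, window_end)
        print(f"*/{step}: {len(ticks)} runs at minutes {[t.minute for t in ticks]}")
```

Note that a `*/25` minute field matches minutes 0, 25, and 50 of each hour, so the gap between the last tick of one hour and the first of the next is 10 minutes rather than 25. Every downstream DAG triggered by this one inherits the higher run count when the step drops to 5.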

API

To ensure other teams could access the scheduling insights programmatically, Dr. Movie's data engineering team made use of the Airflow Schedule Insights plugin’s API methods. These methods allowed them to retrieve relevant scheduling data outside of the Airflow UI, enabling other teams to integrate this information into their systems.

schedule_insights/get_future_runs_json

This method returns the "All Future Runs" table in JSON format, which includes all the future runs for the selected DAGs.

Parameters:

start (Optional): The start date for filtering the future runs.

end (Optional): The end date for filtering the future runs.

timezone (Optional): The timezone for formatting the dates in the response.

dag_id (Optional): The ID of a specific DAG to filter the results by.

schedule_insights/get_next_future_run_json

This method returns the "Next Run" table in JSON format, including details about the next scheduled run for each DAG and its trigger type.

Parameters:

dag_id (Optional): The ID of a specific DAG to filter the results by.

By using these API endpoints, other teams at Dr. Movie could easily fetch the next scheduled runs for all DAGs or specific ones, automating their processes without having to manually check the Airflow UI.
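A client for these endpoints might look like the following sketch, which uses only the Python standard library. The endpoint paths and parameter names come from the descriptions above; the base URL `http://localhost:8080`, the helper names, and the absence of authentication are assumptions, and a real deployment will most likely require credentials. The expected format of the start and end values is not covered here, so the example passes only dag_id and timezone.

```python
import json
from typing import Any, Optional
from urllib.parse import urlencode, urljoin
from urllib.request import urlopen

FUTURE_RUNS = "schedule_insights/get_future_runs_json"
NEXT_RUN = "schedule_insights/get_next_future_run_json"


def build_url(base_url: str, endpoint: str, **params: Optional[str]) -> str:
    """Join base URL and endpoint, adding only the parameters that were given."""
    query = urlencode({k: v for k, v in params.items() if v is not None})
    url = urljoin(base_url.rstrip("/") + "/", endpoint)
    return f"{url}?{query}" if query else url


def fetch_json(url: str, timeout: float = 10.0) -> Any:
    """GET the URL and decode the JSON body (no authentication; an assumption)."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    base = "http://localhost:8080"  # hypothetical Airflow webserver address
    print(build_url(base, NEXT_RUN))
    print(build_url(base, FUTURE_RUNS, dag_id="load_customer_feedback", timezone="Asia/Jerusalem"))
    # To actually call the API against a running webserver:
    # data = fetch_json(build_url(base, NEXT_RUN, dag_id="load_customer_feedback"))
```

Building the URL separately from fetching it keeps the query logic easy to check without a running Airflow instance.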

Conclusion

In conclusion, the Airflow Schedule Insights plugin transforms how you visualize, predict, and manage DAG runs within your Airflow environment. With its intuitive interface, advanced prediction capabilities, and the powerful Schedule Impact Simulator, it equips you to better understand and optimize your pipeline schedules. Whether you're working with cron-scheduled DAGs or those triggered by datasets and dependencies, this plugin provides the tools to ensure smoother workflows and fewer scheduling conflicts.

To get started, check out the plugin on PyPI and explore the source code on GitHub.